AIP - Archival Information Package

AIU - Archival Information Unit

BLOB - Binary large object

DB - Database

CSV - Comma separated values

CLOB - Character large object

DCTM - Documentum

DFC - Documentum Foundation Classes

DMS - Document Management System

DSS - Data Submission Session

GB - Gigabyte

GHz - Gigahertz

IA - InfoArchive

JDBC - Java database connectivity

JRE - Java Runtime Environment

JVM - Java Virtual Machine

KB - Kilobyte

MB - Megabyte

MS - Microsoft

MHz - Megahertz

RAM - Random Access Memory

regex - Regular expression

SIP - Submission Information Package

SPx - Service Pack x

SQL - Structured Query Language

XML - Extensible Markup Language

XSD - File that contains an XML Schema Definition

XSL - File that contains Extensible Stylesheet Language Transformation rules

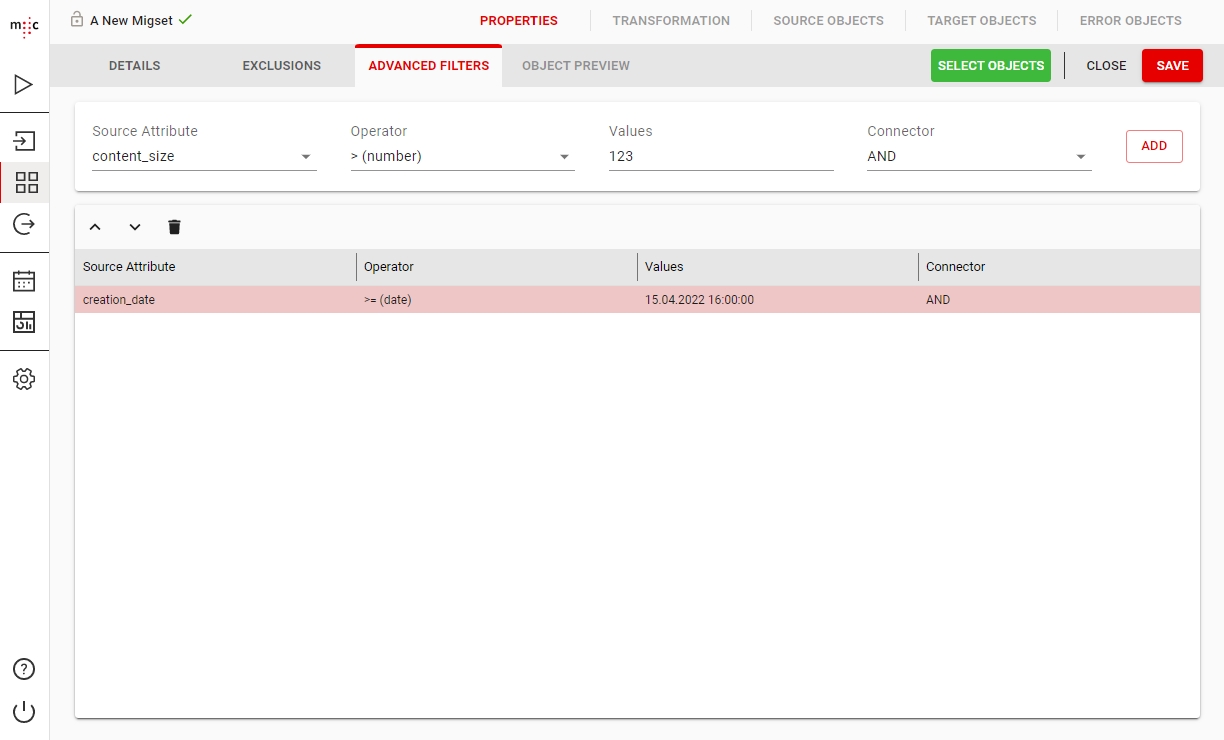

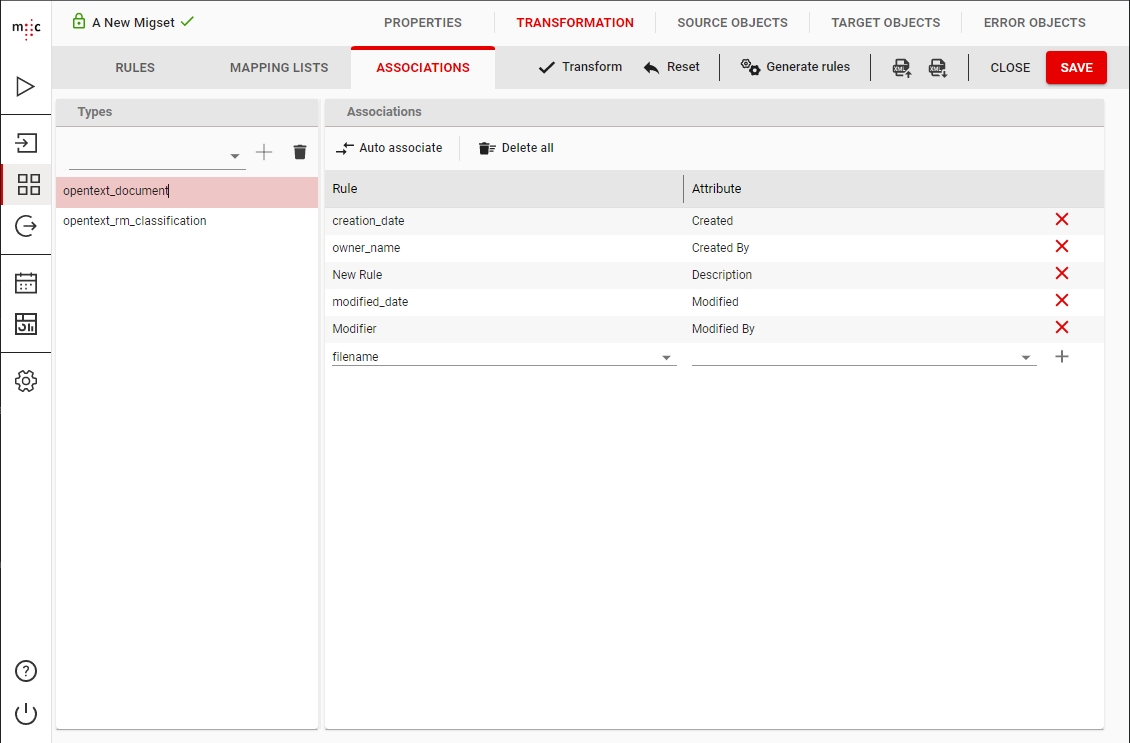

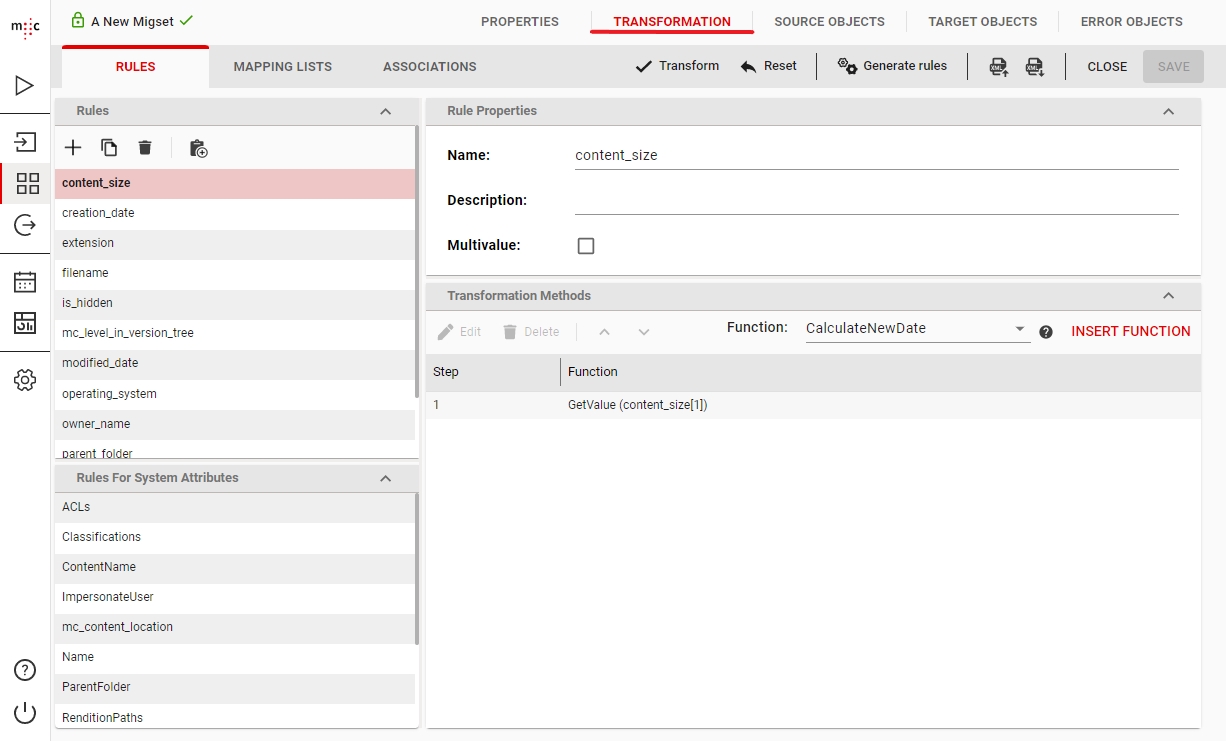

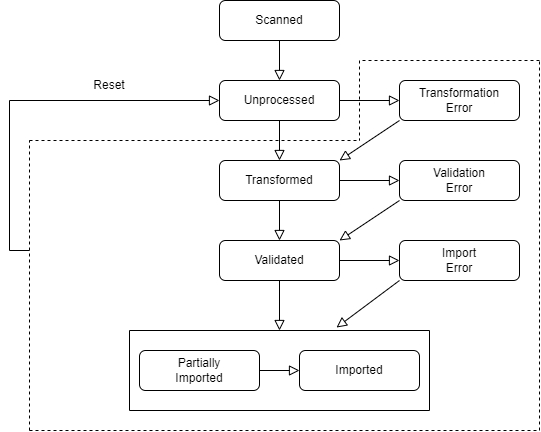

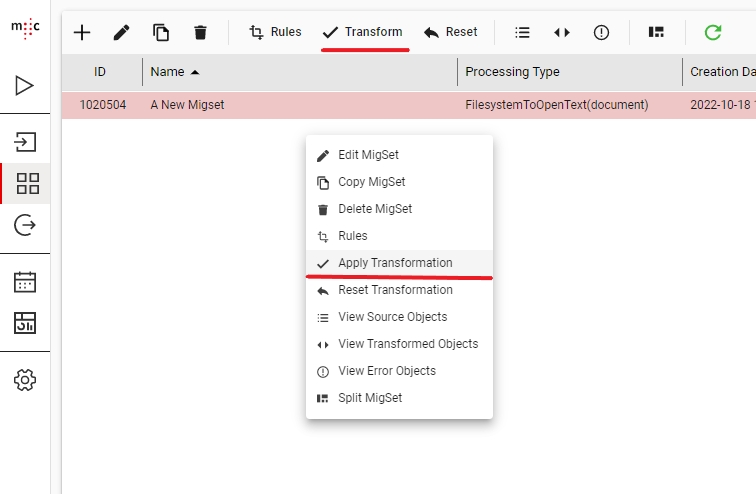

Migration Set A migration set comprises a selection of objects (documents, folders) and a set of rules for migrating these objects. A migration set represents the work unit of migration-center. Objects can be added or excluded based on various filtering criteria. Individual transformation rules, mapping lists and validations can be defined for a migration set. Transformation rules generate values for attributes, which are in turn validated by validation rules. Objects that fail either the transformation or the validation rules are reported as errors, and the user must review and fix these errors before such objects can be imported.

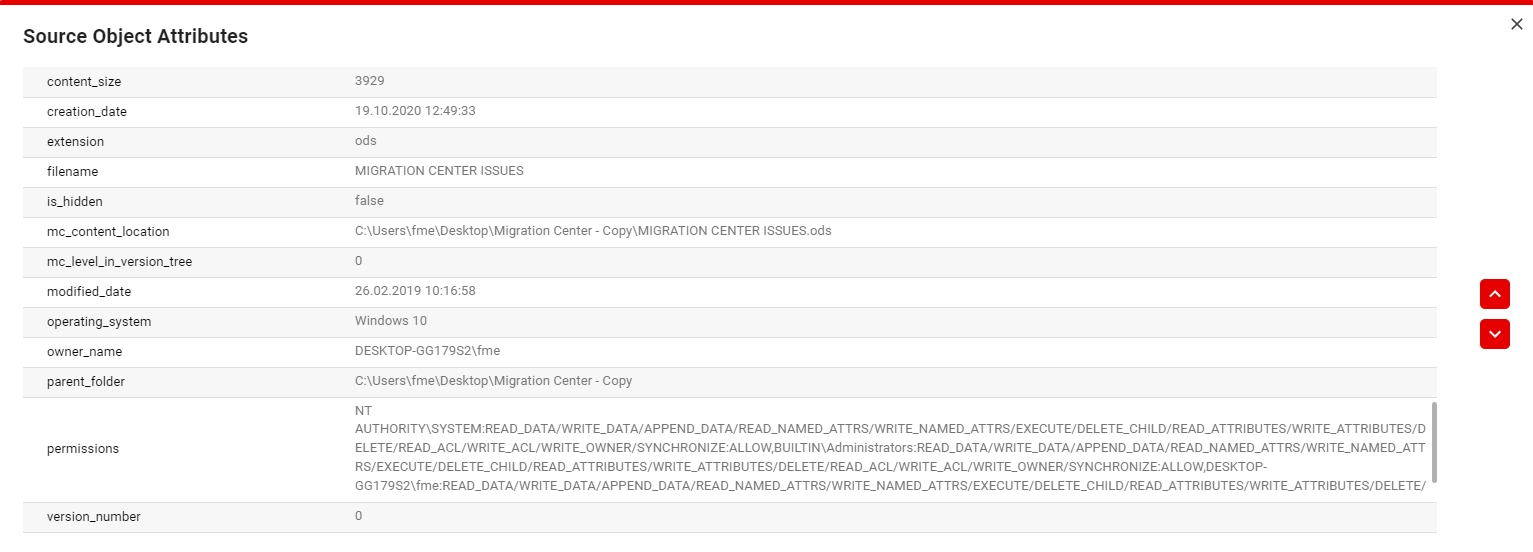

Attribute A piece of metadata belonging to an object (e.g. name, author, creation date, etc.). Can also refer to the attribute’s value, depending on context.

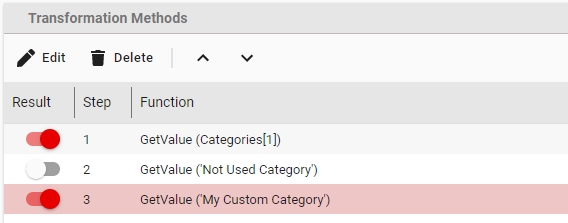

Transformation Rules A set of rules used for editing, transforming and generating attribute values. A set of transformation rules is always unique to a migration set. A single transformation rule comprises one or several steps, where each step calls exactly one transformation function. Transformation rules can be exported/imported to/from files or copied between migration sets containing the same type of objects.

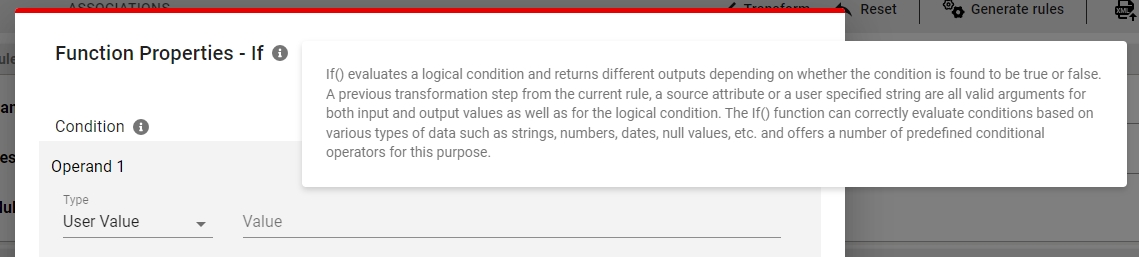

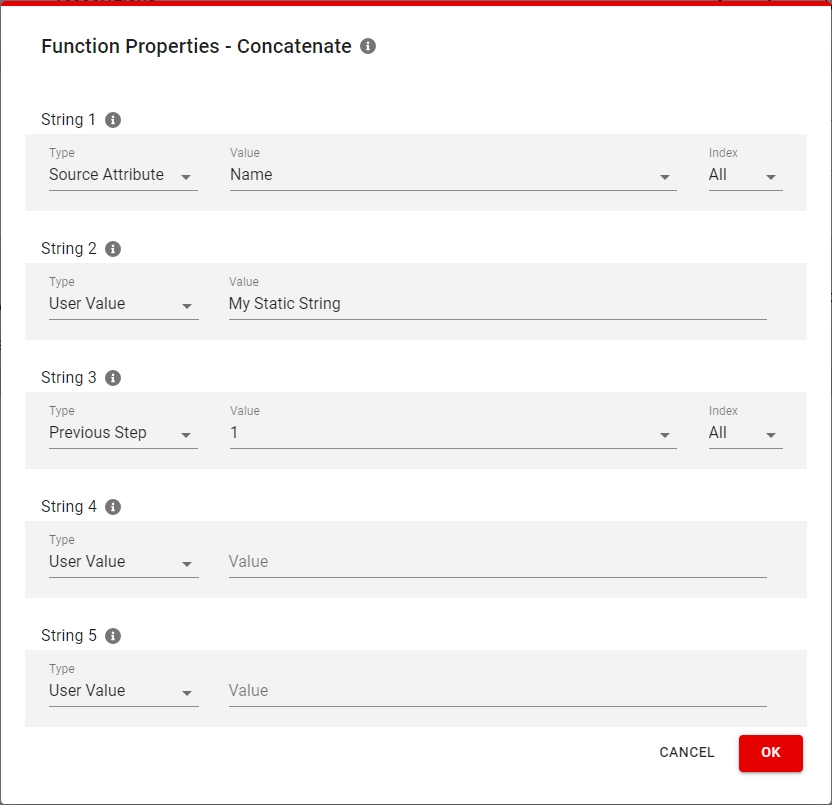

Transformation Function Transformation functions compute attribute values for a transformation rule. Multiple transformation functions can be used in a single transformation rule.

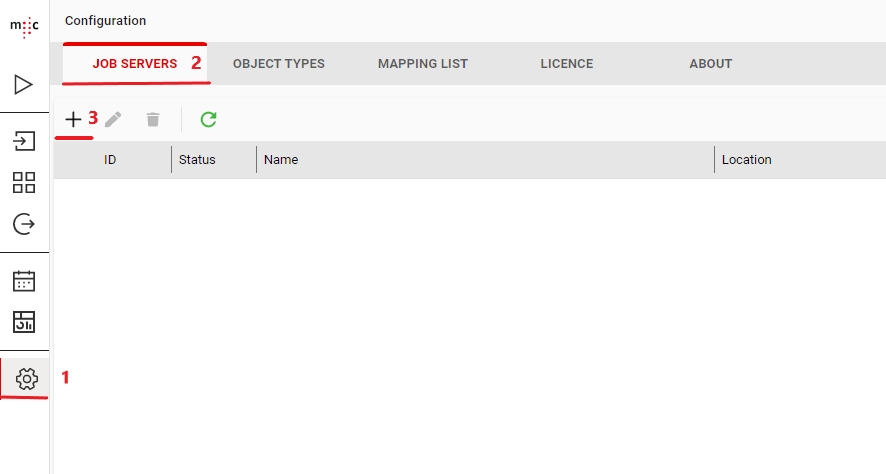

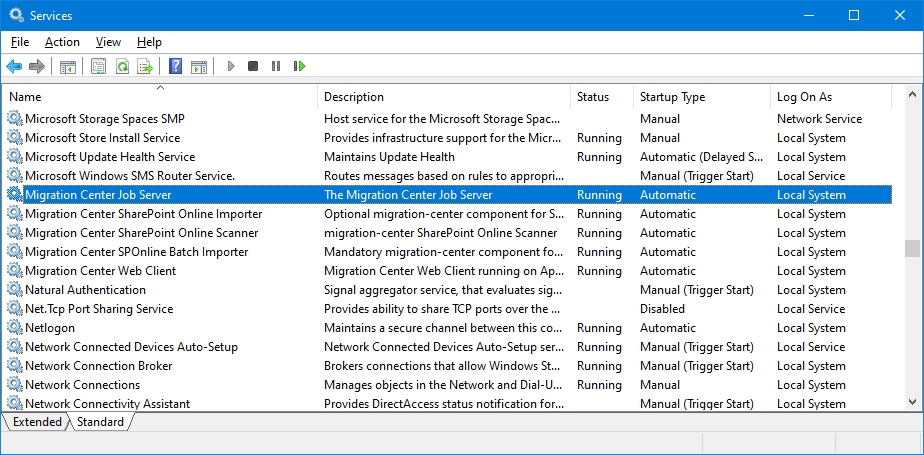

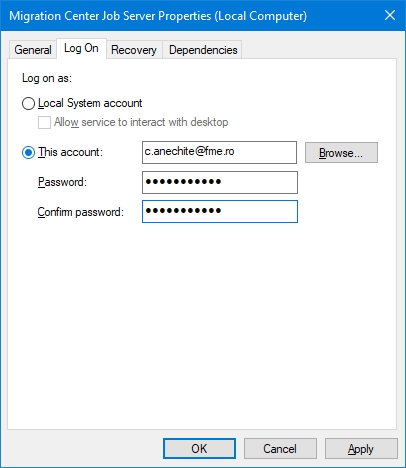

Job Server The migration-center component that listens for incoming job requests and runs jobs by executing the code behind the connectors referenced by those jobs. Starting a scanner or importer which uses the Documentum connector will send a request to the Job Server set for that scanner, and tell that Job Server to execute the specified job with its parameters and the corresponding connector code.

Transformation The transformation process transforms a set of objects according to the set of rules to generate or extract.

Validation Validation checks the attribute values resulting from the Transformation step against the definitions of the object types these attributes are associated with. It checks to make sure the values meet basic properties such as data type, length, repeating or mandatory properties of the attributes they are associated with. Only if an object passes validation for every one of its attributes will it be allowed for import. Objects which do not pass validation are not permitted for import since they would fail anyway.

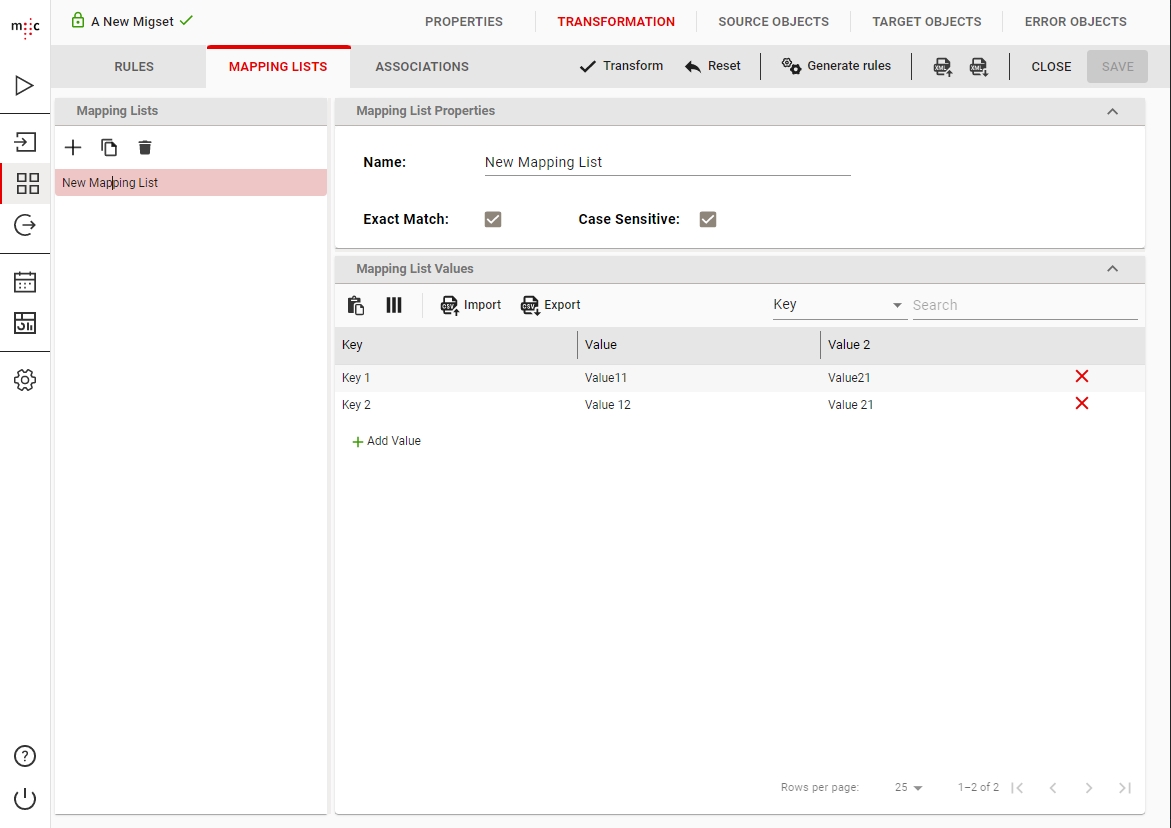

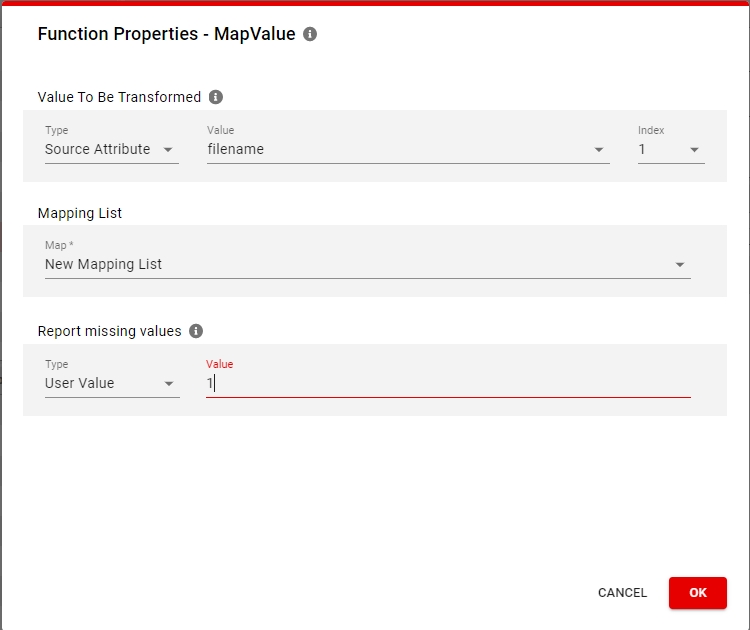

Mapping list A mapping list is a key-value pair used to match a value from the source data (the key) directly to the specified value.

This online documentation describes how to use the migration-center software in order to migrate content from a source system into a target system (or into the same system in case of an in-place migration).

Please see the Release Notes for a summary of new and changed features as well as known issues in the migration-center releases.

Also, please make sure that you have read System Requirements before you install and use migration-center in order to achieve the best performance and results for your migration projects.

The supported source and target systems and their versions can be found in the Supported Version page.

Migration-center is a modular system that connects to the various source and target systems using different connectors. The source system connectors are called scanners and the target system ones are called importers. The capabilities and the configuration parameters of each connector are described in the corresponding manual.

You can find the explanations of the terms and concepts used in this manual in the Glossary.

And last but not least, contains some important legal information.

For additional tips and tricks, latest blog posts, FAQs, and helpful how-to articles, please visit our .

In case you have technical questions or suggestions for improving migration-center, please send an email to our product support at .

Registry Court: District court Brunswick

Register number: HRB 5422

Identification number according to § 27 a Sales Tax Act (UStG): DE 178236072

Our general terms and conditions apply to services provided by us. Our general terms and conditions are available at https://migration-center.com/free-evaluation-copy-license-agreement/. They stipulate that German law applies and that, as far as permissible, the place of jurisdiction is Brunswick.

Reference is made to the European Online Dispute Resolution Platform (ODR platform) of the European Commission. This is available at http://ec.europa.eu/odr.

fme AG does not participate in dispute resolution proceedings before a consumer arbitration board and we are not obliged to do so.

We are responsible for the content of our website in accordance with the provisions of general law, in particular Section 7 (1) of the German Telemedia Act (TMG). All content is created with due care and to the best of our knowledge and is for information purposes only. Insofar as we refer to third-party websites on our Internet pages by any means (e.g. hyperlinks), we are not responsible for the topicality, accuracy and completeness of the linked content, as this content is outside our control and we have no influence on its future design. If you consider any content to be in breach of applicable law or inappropriate, please let us know.

The legal information on this page as well as all questions and disputes in connection with the design of this website are subject to the law of the Federal Republic of Germany.

Our data protection information is available at https://migration-center.com/data-protection-policy/.

The texts, images, photos, videos, graphics and Software, especially code and parts thereof, on our website are generally protected by copyright. Any unauthorized use (in particular the reproduction, editing or distribution) of this copyright-protected content is therefore prohibited without our consent (e.g. license) or an applicable exception or limitation. If you intend to use this content or parts thereof, please contact us in advance using the information provided above.

Here is a list of all the versions supported by migration-center for all of our source and target systems:

| Source System | Supported Versions |
| --- | --- |
| Alfresco | 3.4, 4.0, 4.1, 4.2, 5.2, 6.1.1, 6.2, 7.1, 7.2, 7.3.1 |
| Database | any SQL compliant database having a (compatible) JDBC adapter |
| Documentum Server | 4i, 5.2.5 - 7.3, 16.4, 16.7, 20.2, 20.4, 21.4, 22.4 (MC supports DFC 5.3 and higher) |
| IBM Notes / Domino | 6.x and above |
| Microsoft Exchange | 2010 |
| Microsoft Outlook | 2007, 2010 |
| Microsoft SharePoint | 2007, 2010, 2013, 2016, SharePoint Online |
| OpenText Content Server | 9.7.1, 10.0, 10.5, 16.x, 20.4, 21.4, 22.4 |
| Veeva Vault | Veeva Vault API 22.3 |

| Target System | Supported Versions |
| --- | --- |
| Alfresco | 3.4, 4.0, 4.1, 4.2, 5.0.2, 5.1, 5.2, 6.1.1, 6.2, 7.1, 7.2, 7.3.1 |
| Documentum Server | 5.3 - 7.3, 16.4, 16.7, 20.2, 20.4, 21.4, 22.4 (MC supports DFC 5.3 and higher) |
| Documentum D2 | 4.7, 16.5, 16.6, 20.2, 20.4, 21.4, 22.4 |
| Documentum for Life Sciences | 16.4, 16.6, 20.2, 20.4, 21.4 |
| Generis Cara | 5.3 - 5.9 |

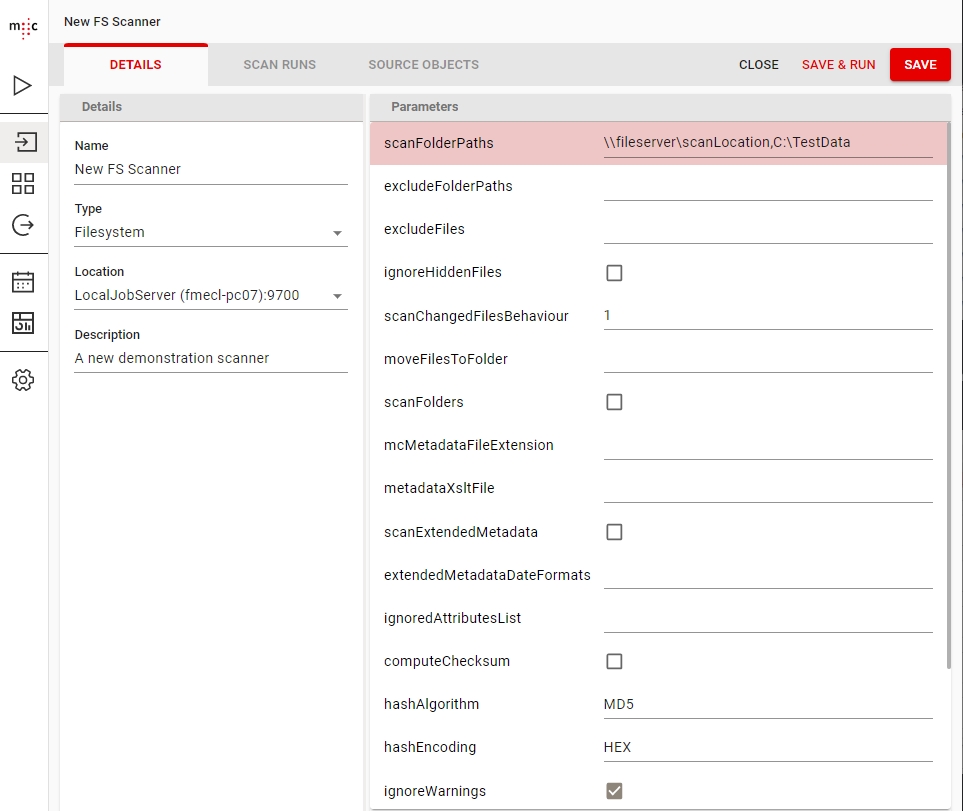

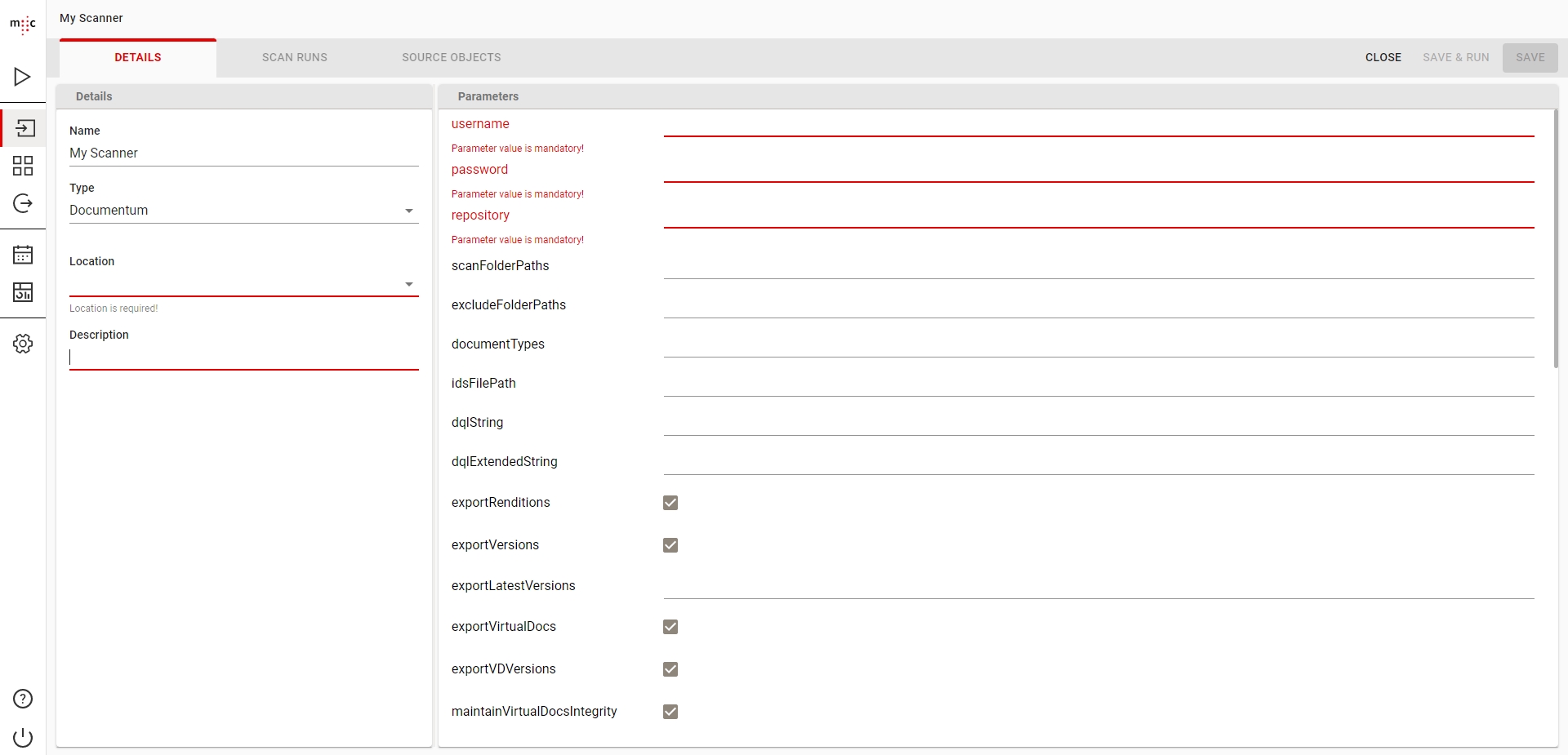

Adapter type*

Select the required connector from the list of available connectors

Location*

Select the Job Server location where this job should run. Job Servers are defined in the Jobserver window. If no Job Server was selected, migration-center will prompt the user to define a Job Server Location when saving the scanner.

Description

Enter a description for this scanner (optional)

Parameters marked with an asterisk (*) are mandatory.

All adaptors have the loggingLevel parameter which sets the verbosity of the run log.

The loggingLevel parameter can have one of 4 numerical values:

1 - logs only errors during scan

2 - is the default value reporting all warnings and errors

3 - logs all successfully performed operations in addition to any warnings or errors

4 - logs all events (for debugging only, use only if instructed by fme product support since it generates a very large amount of output. Do not use in production)
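
The four levels can be read as a simple severity threshold. The following Python sketch only illustrates that reading; the `Severity` names and the `is_logged` helper are hypothetical and are not part of migration-center.

```python
from enum import IntEnum


class Severity(IntEnum):
    """Hypothetical event severities, ordered to match the loggingLevel values."""
    ERROR = 1    # level 1 and above
    WARNING = 2  # level 2 and above (default)
    INFO = 3     # successfully performed operations, level 3 and above
    DEBUG = 4    # all remaining events, level 4 only


def is_logged(severity: Severity, logging_level: int = 2) -> bool:
    """Return True if an event of this severity appears in the run log."""
    if logging_level not in (1, 2, 3, 4):
        raise ValueError("loggingLevel must be 1, 2, 3 or 4")
    return severity <= logging_level


print(is_logged(Severity.WARNING))      # checked against the default level 2
print(is_logged(Severity.INFO, 2))      # successful operations need level 3
```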

| Target System | Supported Versions |
| --- | --- |
| Hyland OnBase | 20.8.x |
| InfoArchive | 4.0, 4.1, 4.2, 16.3, 16.4, 16.5, 16.7, 20.4 |
| Microsoft SharePoint | 2013, 2016, 2019, SharePoint Online, OneDrive for Business |
| OpenText Content Server | 10.5, 16.x, 20.2, 20.4, 21.4, 22.4, 23.3 |
| Veeva Vault | Veeva Vault API 22.3 |

General

Show migset name and migset id in the importer run report (#68918)

New Azure Blob Storage Importer (#67191)

Imports documents with content

Sets system metadata on documents

Sets custom metadata on documents

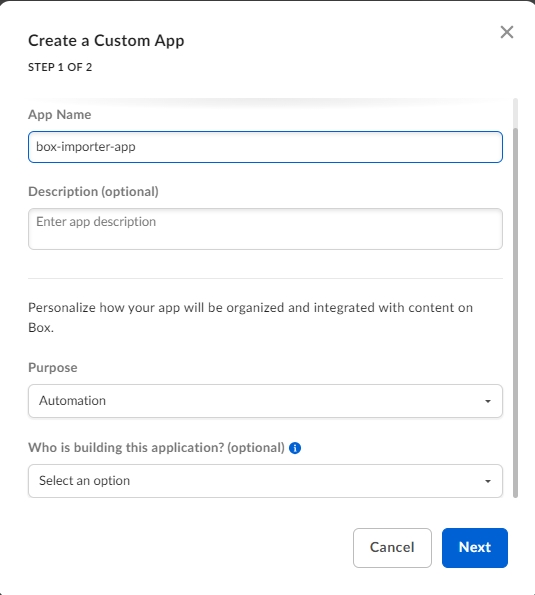

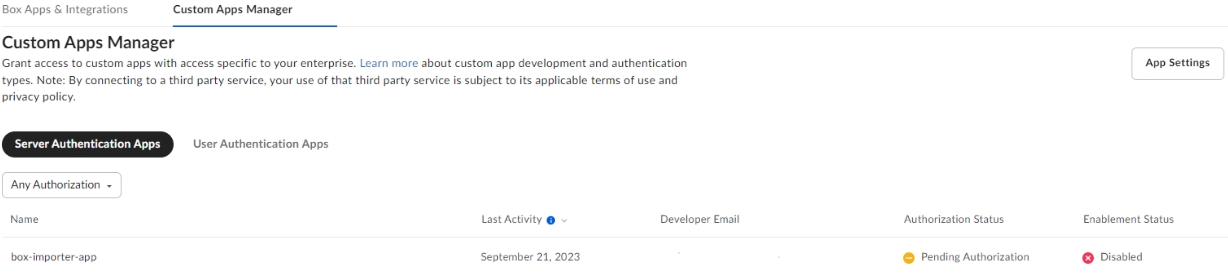

Box Importer

Create folders and metadata (#68813)

Optimize / reduce the number of REST API calls (#68775)

Validate dropdown values before submitting the request to Box (#68774)

Cara Importer

Add support for Cara 5.9 (#68919)

Add support for setting sequence_no (#68777)

Partially import documents that fail because of invalid object references (#68905)

Exchange Scanner

Add support for Exchange 365 (#68960)

OpenText Content Server Importer

Add support for importing into OpenText Content Server 23.3 (#68834)

WebClient

Copy rules from migset (#68954)

Filter by Value (#68783)

Reset all Error Objects (#68784)

Database

multivalueRemoveDuplicates incorrect on null / empty values (#69237)

Alfresco Importer

Box Importer

Created and Modified attributes not set on folders (#69471)

Cara Importer

When migrating from a source system in one time zone to a target system in a different time zone, migration-center converts the DateTime values depending on several factors.

Note that the following connectors do not convert the date values on scan or import: OpenText Content Server connectors and the Database scanner.

DateTime values are converted when an object is scanned from source or imported into target based on the Timezone set on the Jobserver machine (Windows or Linux).

(the SharePoint scanner is a special case and has a dedicated section in this article)

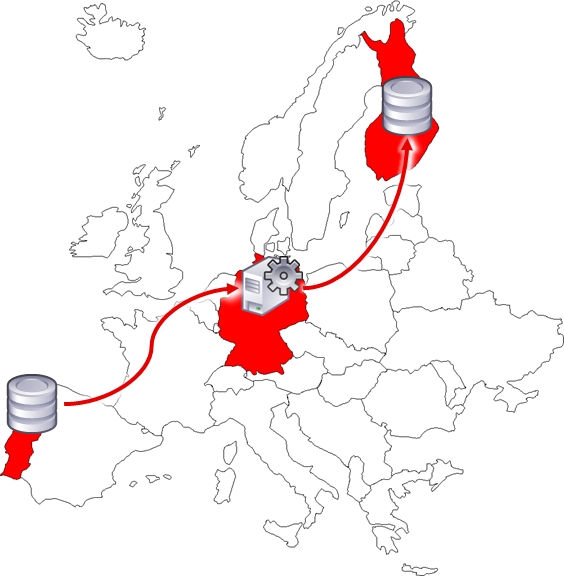

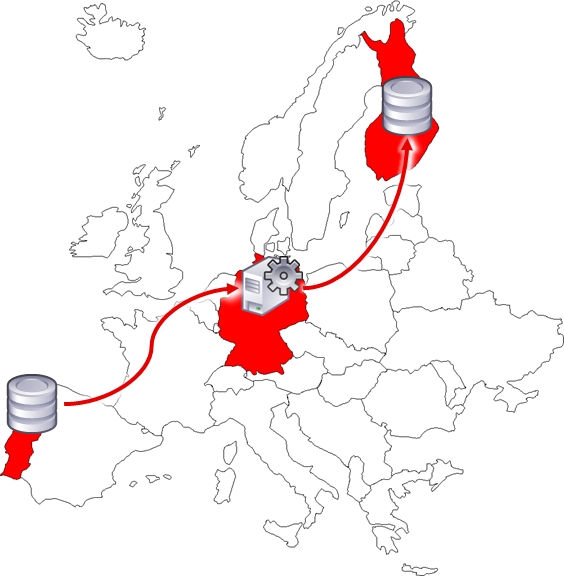

The Source system is located in Portugal, West European Time zone WET (=UTC). The migration-center's job server is located in Germany, Central European Time zone CET (=UTC+1). The Target system is located in Finland, Eastern European Time zone EET (=UTC+2).

A Documentum scanner would convert the values from WET to CET (adding 1 hour) and store them in the MC database. A Documentum importer would then convert the CET values from the database to EET (adding another hour) before saving them in the target system.
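
The arithmetic of this example can be reproduced with a short Python sketch. It uses the fixed offsets stated above (WET = UTC, CET = UTC+1, EET = UTC+2) and models only the offset conversion, not how the connectors implement it; the `convert` helper and the variable names are illustrative.

```python
from datetime import datetime, timedelta, timezone

# Fixed offsets as stated in the example (daylight saving time is ignored).
WET = timezone(timedelta(hours=0), "WET")  # source system, Portugal
CET = timezone(timedelta(hours=1), "CET")  # job server, Germany
EET = timezone(timedelta(hours=2), "EET")  # target system, Finland


def convert(value: datetime, target_zone: timezone) -> datetime:
    """Express the same instant as wall-clock time in another time zone."""
    return value.astimezone(target_zone)


source_value = datetime(2017, 6, 12, 15, 0, 0, tzinfo=WET)
stored_value = convert(source_value, CET)  # what a scanner would store
target_value = convert(stored_value, EET)  # what an importer would write

fmt = "%d.%m.%Y %H:%M:%S %Z"
print(source_value.strftime(fmt))  # 12.06.2017 15:00:00 WET
print(stored_value.strftime(fmt))  # 12.06.2017 16:00:00 CET
print(target_value.strftime(fmt))  # 12.06.2017 17:00:00 EET
```

All three values denote the same instant; only the displayed wall-clock time changes with the time zone.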

Different job servers are used for scanning and importing and they are located in different time zones. If this is the case, please ensure that all job servers used in that particular migration have the same time zone setting.

Either source or target system is OpenText Content Server or database. In this case, please set the job server's time zone to the time zone of the OpenText or database system.

Source and target systems are OpenText Content Server or database, i.e. migration from database to OTCS or from OTCS to OTCS. Unfortunately, this case is currently not supported by migration-center. Please contact our product support to discuss possible solutions for this case.

The SharePoint scanner consists of two parts: a WSP part that is installed on the SharePoint server and a Java part that is in the migration-center Jobserver.

The WSP part will read date time values with the time zone settings in SharePoint's regional settings, for example West European Time in the example above. Unfortunately, the Java part of the SharePoint scanner always expects date time values in UTC. Therefore the time zone on the job server must be set to Coordinated Universal Time (UTC). This will ensure that the scanner saves the correct date time values in the migration-center database.

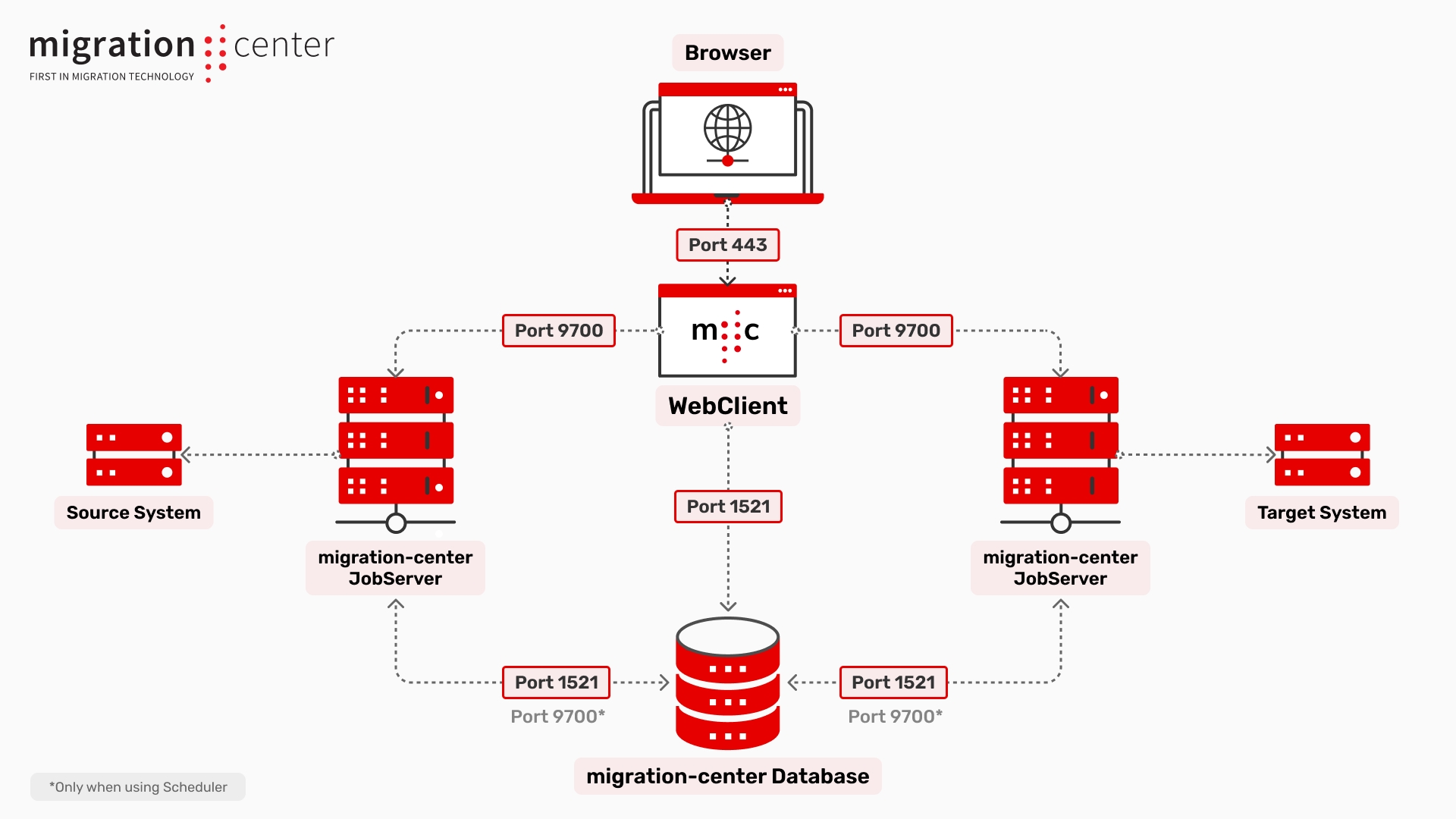

A content migration with migration-center always involves a source system, a target system, and of course the migration-center itself.

This section provides information about the requirements for using migration-center in a content migration project.

For best results and performance, it is recommended that every component be installed on a different system. Hence, the system requirements for each component are listed separately.

The required capacities depend on the number of documents to be processed. The values given below are minimal requirements for a system processing about 1.000.000 documents. Please see the for more details.

The Documentum NCC (No Content Copy) Scanner is a special variant of the regular Documentum Scanner. It offers the same features as the regular Documentum Scanner, with the difference that the content of the documents is not exported from Documentum during migration. The content files themselves can be attached to the migrated documents in the target repository by using one of the following methods:

copy files from the source storage to the target storage outside of migration-center

The Outlook scanner can extract messages from an Outlook mailbox and use them as input into migration-center, from where they can be processed and migrated to other systems supported by the various migration-center importers.

The Microsoft Outlook Scanner currently supports Microsoft Outlook 2007 and 2010 and uses the Moyosoft Java Outlook Connector API to access an Outlook mailbox and extract emails including attachments and properties.

Sets blob index tags

Migset "add new association" entry UI re-design (#68782)

Transformation rules clipboard can be pasted any number of times (#68785)

Remove dependency on ENV variables in REST API (#68642)

Improve Object Type section performance (#69074)

Improve Object Type stability during CRUD operations (#68781)

Improve UI performance of Adapters input (#68655)

Box Importer

Object Type name is being sent in all lowercase characters (#68978)

Cara Importer

First line in relation_config.properties is ignored (#68907)

Empty lines when logging level is 2 (#68916)

Cara file path too long error (#67864)

Setting an ID field which is bound to the LATEST version fails (#67060)

Indexing documents is applied when indexDocuments is not checked (#68906)

Audittrail attribute_list and attribute_old_list are not migrated (#69106)

Internal attribute “title” is not set (#66927)

Veeva Importer

The character \ is not visible in Veeva (#68811)

Import fails if an attribute value ends with "\" (#68812)

WebClient

Blank page when current connection was deleted in another session (#68988)

Error when filtering migset target or error objects in PostgreSQL (#69621)

Retrieving error objects fails on Postgres when rule is associated in two objects types (#69511)

Migset mapping lists incorrectly shown in new migsets after importing migset from file (#69014)

Add association button enables when switching between object types (#64272)

Copying a mapping list duplicates all entries after clicking on an entry and then away (#69228)

Previous step not correctly displayed in IF function (#69163)

WebClient

Refreshing source objects after filtering value on column with upper case letters fails (#69629)

Object filtering fails on Postgres if value contains backslash "\" (#69575)

Function with attribute that no longer exists does not change when setting first entry of new existing attribute list (#69713)

attach the source storage to the target repository so the content will be accessed from the original storage.

The scenario for such migrations usually involves migrations with very large numbers of documents (>>10.000.000), where extracting and transferring the content between the source and target systems would take too much time; thus the approach where only the metadata and content references are migrated is preferred. The actual content is then transferred independently using fast, low overhead file system level access directly in the SAN without having to pass through the API of the source system, migration-center, and then again through the target system’s API as would be the case during a standard Documentum migration. Since the references to the content files are preserved, simply dropping the actual content in place in the respective filestore(s) completes the migration and requires no additional tasks to be performed to link the content to the recently migrated objects. This approach is of course not universally applicable to any Documentum migration project, and needs to be considered and planned beforehand if intended to be used for a given migration.

The Documentum Scanner currently supports Documentum Content Server versions 6.5 to 20.2, including service packs.

For accessing a Documentum repository Documentum Foundation Classes 6.5 or newer is required. Any combinations of DFC versions and Content Server versions supported by EMC Documentum are also supported by migration-center’s Documentum Scanner, but it is recommended to use the DFC version matching the version of the Content Server being scanned. The DFC must be installed and configured on every machine where migration-center Server Components is deployed.

When scanning Documentum documents with the Documentum No Content Copy (NCC) scanner, content files are not exported to the file system anymore, nor are they being imported to the target system. Instead the scanner exports information about each dmr_content object from the source system and saves this as additional, internal information related to the document objects in the migration-center database. The Documentum No Content Copy(NCC) Importer is able to process the content related information and restore the references to the content files within the specified filestore(s) upon import. Copying, moving, or linking the folders containing the content files to the new filestore(s) of the target system is all that’s required to restore the connection between the migrated objects and their content files. Please consult the Documentum Content Server Administration Guide for your Content Server version to learn about filestores and their structure in order to understand how the content files are to be moved between source and target systems.

The Documentum specific features supported by Documentum NCC scanner are fully described in the Documentum Scanner section.

| Category | Requirements |
| --- | --- |
| RAM | 4 GB - 8 GB of memory for migration-center database instance |
| Hard disk storage space | Depends on the number of documents to migrate, roughly 2 GB for every 100.000 documents. |
| CPU | 2-4 cores, min. 2.5 GHz (corresponding to the requirements of the Oracle database) |
| Oracle version | 11g Release 2, 12c Release 1, 12c Release 2, 18c, 19c, 21c |
| Oracle Editions | Standard Edition One, Standard Edition, Enterprise Edition, Express Edition. Note: Express Edition is fully supported but not recommended in production because of its limitations. |

| Category | Requirements |
| --- | --- |
| RAM | 4 GB - 8 GB of memory for the PostgreSQL server |
| Hard disk storage space | Depends on the number of documents to migrate, roughly 2 GB for every 100.000 documents |
| CPU | 4 cores |
| PostgreSQL version | 15.4 |
| Operating system | All OS supported by PostgreSQL |
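
As a rough planning aid, the rule of thumb of about 2 GB of database storage per 100.000 documents can be turned into a small calculation. The function below is a hypothetical sketch, not a migration-center tool, and the result is only a linear estimate.

```python
GB_PER_100K_DOCUMENTS = 2.0  # rule of thumb from the requirements tables


def estimate_database_storage_gb(document_count: int) -> float:
    """Linear estimate of the database disk space needed, in GB."""
    if document_count < 0:
        raise ValueError("document_count must not be negative")
    return document_count / 100_000 * GB_PER_100K_DOCUMENTS


# The reference system size of about 1.000.000 documents:
print(estimate_database_storage_gb(1_000_000))  # 20.0
```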

| Category | Requirements |
| --- | --- |
| RAM | By default, the Job Server is configured to use 1 GB of memory. This will be enough in most cases. In some special cases (multiple big scanner/importer batches) it can be configured to use more RAM. |
| Hard disk storage space (logs) | 1 GB + storage space for log files (~50 MB for 100,000 files) |
| Hard disk storage space (objects scanned from source system) | Variable. Refers to temporary storage space required for scanned objects. Can be allocated on a different machine in LAN. |
| CPU | 2-4 cores, min. 2.5 GHz |
| Operating system | Windows Server 2012, 2016, 2019; Windows 10; Linux |

| Category | Requirements |
| --- | --- |
| RAM | 4 GB (for the WebClient) |
| Hard disk storage space | 200 MB |
| CPU | min. 1 core |
| Operating system | Windows Server 2016, 2019; Windows 10 |
| Java runtime | Oracle/OpenJDK JRE 8 64-bit; Oracle/OpenJDK JRE 11 64-bit |

| Category | Requirements |
| --- | --- |
| RAM | 8 GB (1 GB for MC client) |
| Hard disk storage space | 10 MB |
| CPU | min. 1 core |
| Operating system | Windows Server 2012, 2016, 2019; Windows 10 |
| Database software | 32 bit client of Oracle 11g Release 2 (or above), or 32 bit Oracle Instant Client for 11g Release 2 (or above) |

The Outlook scanner does not work with Java 11. Please use Java 8 for the Jobserver that will run the scanner.

To create a new Outlook Scanner job, specify the respective adapter type in the Scanner Properties window – from the list of available connectors, “Outlook” must be selected. Once the adapter type has been selected, the Parameters list will be populated with the parameters specific to the selected adapter type, in this case the Outlook connector’s.

The Properties window of a scanner can be accessed by double-clicking a scanner in the list, or selecting the Properties button or entry from the toolbar or context menu.

The common adaptor parameters are described in Common Parameters.

The configuration parameters available for the Outlook Scanner are described below:

scanFolderPaths* Outlook folder paths to scan.

The syntax is \\<accountname>[\folder path]. The account name at least must be specified. Folders are optional (specifying nothing but an account name would scan the entire mailbox, including all subfolders). Multiple paths can be entered by separating them with the “|” character.

Example: \\user@domain\Inbox would scan the Inbox of user@domain (including subfolders)

excludeFolderPaths Outlook folder paths to exclude from scanning. Follows the same syntax as scanFolderPaths above.

Example: \\user@domain\Inbox\Personal would exclude user@domain’s personal mails stored in the Personal subfolder of the Inbox if used in conjunction with the above example for scanFolderPaths.

ignoredAttributesList A comma separated list of Outlook properties to be ignored by the scanner.

At least Body,HTMLBody,RTFBody,PermissionTemplateGuid should always be excluded, as these significantly increase the size of the information retrieved from Outlook but do not provide any information useful for migration purposes in return.

exportLocation* Folder path. The location where the exported object content should be temporarily saved. It can be a local folder on the same machine as the Job Server or a shared folder on the network. This folder must exist prior to launching the scanner and must have write permissions. migration-center will not create this folder automatically. If the folder cannot be found, an appropriate error will be raised and logged. This path must be accessible by both scanner and importer, so if they are running on different machines, it should be a shared network folder.

loggingLevel* See Common Parameters.

Parameters marked with an asterisk (*) are mandatory.
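
To illustrate the path syntax used by scanFolderPaths and excludeFolderPaths, here is a minimal Python sketch that splits such a value into account and folder parts and checks a folder against the exclusions. The helper names are hypothetical, and the matching rule (an excluded folder also covers its subfolders) is an assumption based on the description above, not the scanner's actual code.

```python
from typing import List, Tuple


def parse_folder_paths(value: str) -> List[Tuple[str, str]]:
    """Split a '|'-separated list of \\\\<accountname>[\\folder path] entries."""
    result = []
    for raw in value.split("|"):
        path = raw.strip()
        if not path:
            continue
        if not path.startswith("\\\\"):
            raise ValueError(f"path must start with two backslashes: {path!r}")
        account, _, folder = path[2:].partition("\\")
        if not account:
            raise ValueError(f"account name is missing: {path!r}")
        result.append((account, folder))  # folder is "" for a whole mailbox
    return result


def is_excluded(account: str, folder: str, excluded: List[Tuple[str, str]]) -> bool:
    """Assumed rule: a folder is excluded if it is, or lies below, an excluded path."""
    for ex_account, ex_folder in excluded:
        if account != ex_account:
            continue
        if ex_folder == "" or folder == ex_folder or folder.startswith(ex_folder + "\\"):
            return True
    return False


scan = parse_folder_paths(r"\\user@domain\Inbox")
exclude = parse_folder_paths(r"\\user@domain\Inbox\Personal")
print(scan)
print(is_excluded("user@domain", r"Inbox\Personal\2017", exclude))
```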

The Outlook scanner connects to a specified Outlook mail account and can extract messages from one (or multiple) folder(s) existing within that account's mailbox. All subfolders of the specified folder(s) will automatically be processed as well; an option for excluding selected subfolders from scanning is also available. See chapter Outlook scanner parameters below for more information about the features and configuration parameters available in the Outlook scanner.

In addition to the emails themselves, attachments and properties of the respective messages are also extracted. The messages and included attachments are stored as .msg files on disk, while the properties are written to the mc database, as is the standard with all migration-center scanners.

After a scan has completed, the newly scanned email messages and their properties are available for further processing in migration-center.

| Source System Date | Date saved in MC database | Target System Date |
| --- | --- | --- |
| 12.06.2017 15:00:00 WET | 12.06.2017 16:00:00 CET | 12.06.2017 17:00:00 EET |
| 11.06.2017 23:00:00 WET | 12.06.2017 00:00:00 CET | 12.06.2017 01:00:00 EET |

The AzureBlob importer takes files processed in migration-center and imports them into the target Azure Blob containers.

You will need the Azure Storage EndPoint and a SAS Token to connect the importer to Azure.

A standard Azure Storage Endpoint includes the unique storage account name along with a fixed domain name. The format of a standard endpoint is:

https://<storage-account>.blob.core.windows.net
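
As an illustration of how the endpoint and the SAS token fit together, the following Python sketch builds a blob URL according to the general Azure convention (endpoint, container, blob path, then the SAS token as query string). The function names and sample values are hypothetical; how the importer combines these values internally is not described here.

```python
from urllib.parse import quote


def storage_endpoint(storage_account: str) -> str:
    """Standard Blob Storage endpoint for a storage account."""
    return f"https://{storage_account}.blob.core.windows.net"


def blob_url(storage_account: str, container: str, blob_path: str, sas_token: str = "") -> str:
    """Build the URL of a blob, optionally authorized by a SAS token."""
    url = f"{storage_endpoint(storage_account)}/{quote(container)}/{quote(blob_path)}"
    if sas_token:
        # A SAS token is a query string; accept it with or without the leading '?'.
        url += "?" + sas_token.lstrip("?")
    return url


# 'mystorage', 'documents' and the token below are placeholder values.
print(blob_url("mystorage", "documents", "contracts/2023/offer 1.pdf", "?sv=example&sig=example"))
```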

For more information about the Azure Endpoints see:

To generate the SAS Token follow the Azure documentation here:

sasToken The SAS Token used to connect to Azure. See .

cache_control The Azure document's cache control header. Used to manage the expiration of blob storage in Azure CDN. See:

containerName The name of the Azure container where the document will be imported

content_disposition The content disposition attribute of the Azure document. The Content-Disposition response header field conveys additional information about how to process the response payload. See:

You can set Azure Metadata attributes by adding attributes to the Object Type you will be using in the migset. The Object Type name is not relevant.

Any attribute present in the Object Type and associated in the migset will be set on your imported documents.

You can set Blob Index Tags by adding them to the Object Type and associating them in the migset, just like a regular Metadata attribute.

The OpenText InPlace importer takes the objects processed in migration-center and imports them back into an OpenText repository. The OpenText InPlace importer works only together with the OpenText scanner.

The OpenText InPlace adaptor supports a limited number of OpenText features, specifically changing document categories and category attributes.

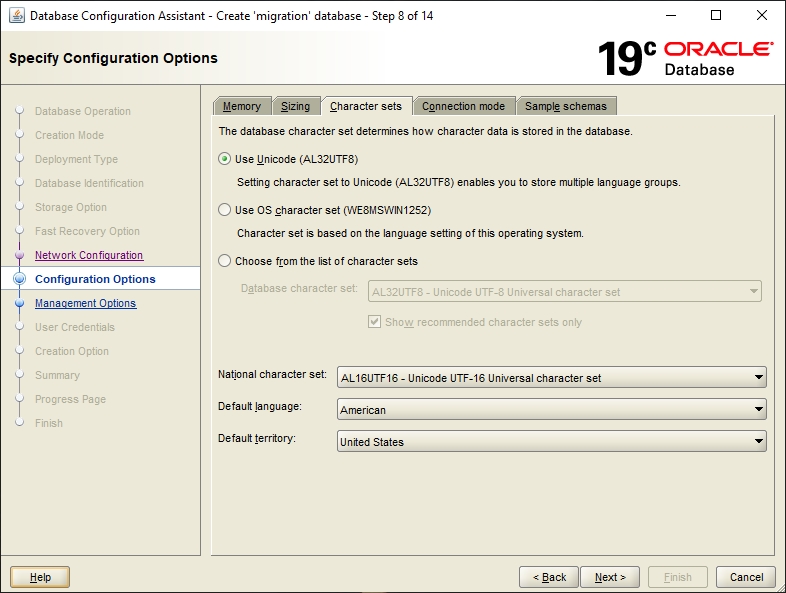

Oracle instance

Character set: AL32UTF8 Necessary database components: Oracle XML DB

Operating system

All OS supported by Oracle

Java runtime

Oracle/OpenJDK JRE 8, Oracle/OpenJDK JRE 11

32 or 64 bit

Browser

Chrome or Edge

loggingLevel See Common Parameters.

content_language The content language attribute of the Azure document.

content_type The content type attribute of the Azure document. Sets the content file MIME Type.

fileName The file name of the document being imported.

folder_path The Azure folder path where the document will be created

mc_content_location The content location of the document. If not set, the content location of the source object is used.

target_type The target type representing the AzureBlob document type.

OpenText InPlace is compatible with versions 10.5, 16.0, 16.4 and 20.2 of OpenText Content Server.

It requires Content Web Services to be installed on the Content Server. To set classifications on the imported files or folders, the Classification Webservice must be installed on the Content Server. To support Record Management Classifications, the Record Management Webservice is required.

To create a new OpenText InPlace Importer job specify the respective adapter type in the importer’s Properties window – from the list of available connectors, “OpenText InPlace” must be selected. Once the adapter type has been selected, the Parameters list will be populated with the parameters specific to the selected adapter type, in this case, OpenText InPlace.

The Properties window of an importer can be accessed by double-clicking an importer in the list, by selecting the Properties button from the toolbar or from the context menu.

The common adaptor parameters are described in Common Parameters.

The configuration parameters available for the OTCS InPlace importer are described below:

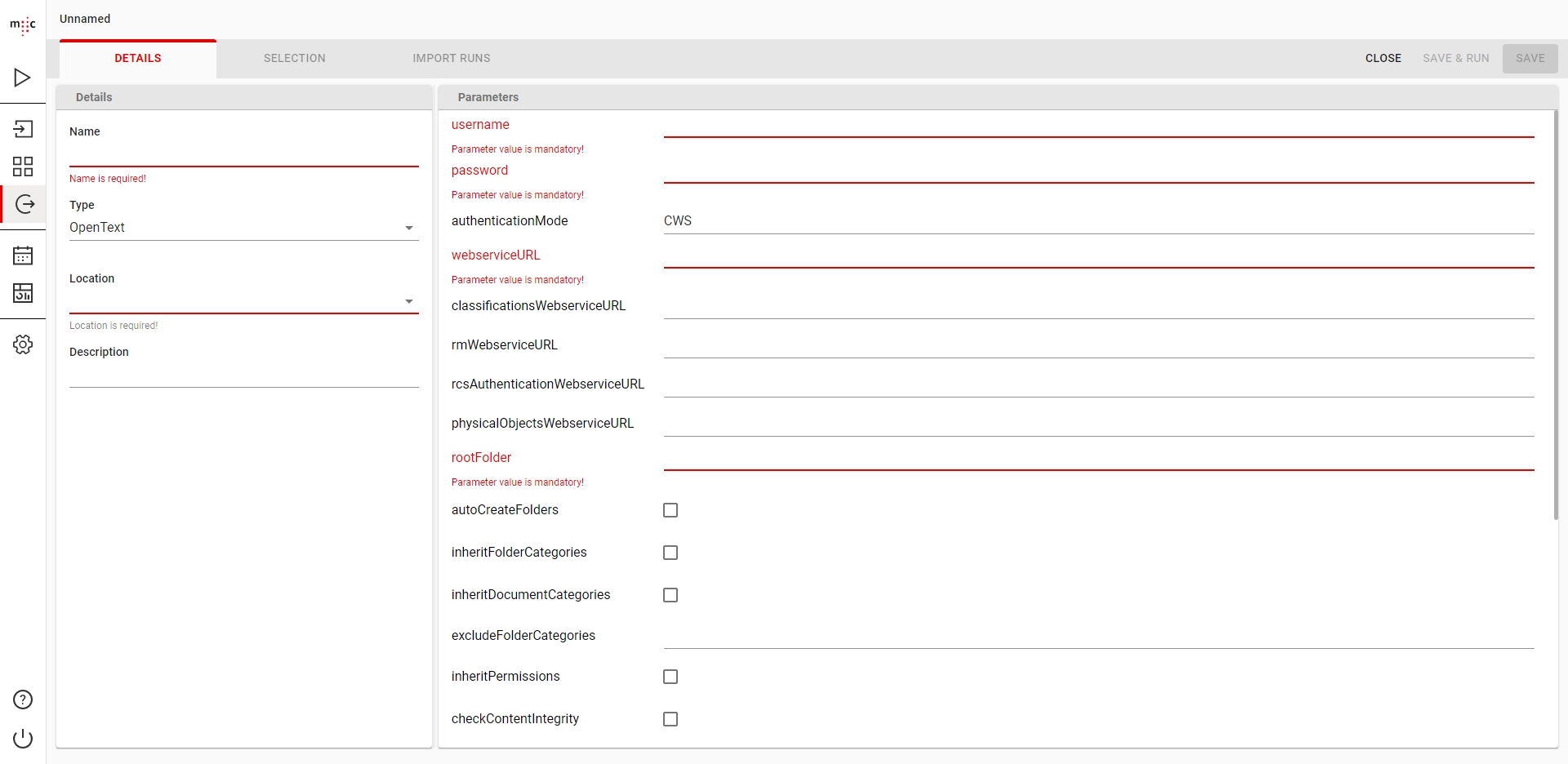

username* User name for connecting to the target repository. A user account with super user privileges must be used to support the full OpenText functionality offered by migration-center.

password* The user’s password.

authenticationMode* The OpenText Content Server authentication mode. Valid values are: CWS for regular Content Server authentication; RCS for authentication of OpenText Runtime and Core Services; RCSCAP for authentication via Common Authentication Protocol over Runtime and Core Services. Note: If this version of the OpenText Content Server import adaptor is used together with “Extended ECM for SAP Solutions”, then authenticationMode has to be set to “RCS”, since OpenText Content Server together with “Extended ECM for SAP Solutions” is deployed under “Runtime and Core Services”. For details of the individual authentication mechanisms and scenarios provided by OpenText, see the appropriate documentation at OpenText KnowledgeCenter.

webserviceURL* The URL to the Authentication service of the “les-services”: Ex: http://server:port/les-services/services/Authentication

rootFolder* The ID of the node under which the documents will be imported. Ex. 2000

overwriteExistingCategories When checked the attributes of the existing category will be overwritten with the specified values. If not checked, the existing categories will be deleted before the specified categories will be added.

numberOfThreads The number of threads that will be used for importing objects. Maximum allowed is 20.

loggingLevel* See Common Parameters.

Parameters marked with an asterisk (*) are mandatory.

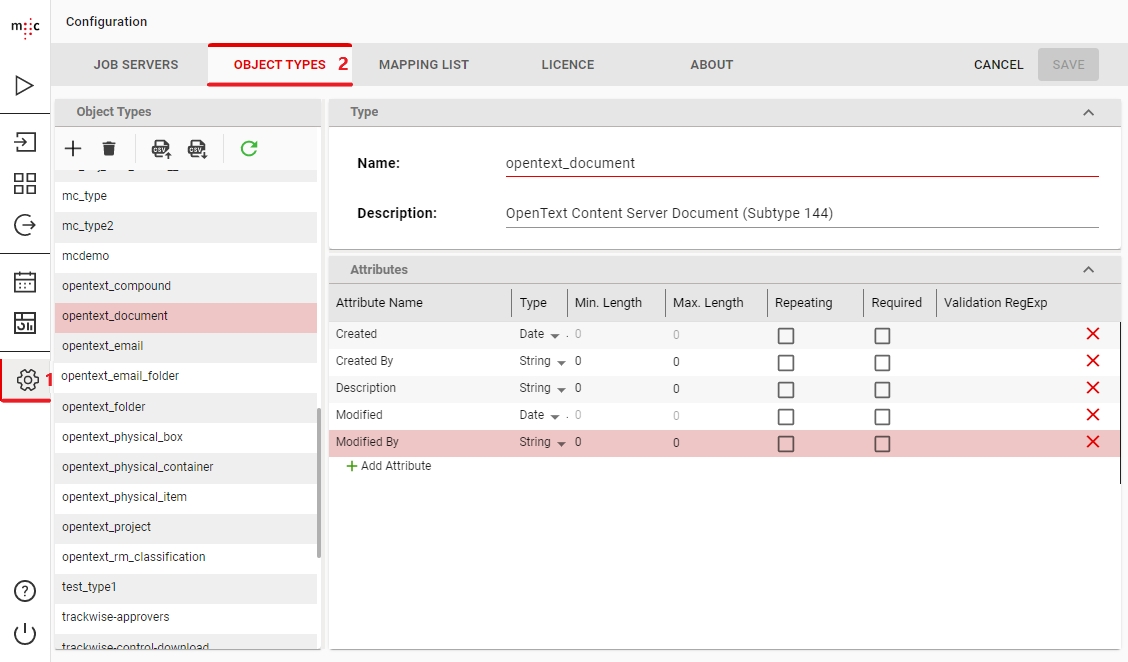

The OpenText InPlace importer allows assigning categories to the imported documents and folders. A category is handled internally by the migration-center client as a target object type, and therefore the categories have to be defined in the migration-center client in the object types window ( <Manage> <Object types> ).

Since multiple categories with the same name can exist in an OpenText repository the category name must be always followed by its internal id. Ex: BankCustomer-44632.

The sets defined in the OpenText categories are supported by migration-center. The set attributes will be defined within the corresponding object type using the pattern <Set Name>#<Attribute Name>. The importer will recognize the attributes containing the separator “#” to be attributes belonging to the named Set and it will import them accordingly.
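
The two naming conventions above (category name followed by its internal id, and <Set Name>#<Attribute Name> for set attributes) can be illustrated with a small Python sketch. The helper functions and the sample names "Address" and "Street" are hypothetical; the importer's own parsing may differ.

```python
from typing import Optional, Tuple


def split_category_name(object_type: str) -> Tuple[str, int]:
    """Split e.g. 'BankCustomer-44632' into the category name and internal id."""
    name, separator, internal_id = object_type.rpartition("-")
    if not separator or not name or not internal_id.isdigit():
        raise ValueError(f"expected <category name>-<id>, got {object_type!r}")
    return name, int(internal_id)


def split_set_attribute(attribute: str) -> Tuple[Optional[str], str]:
    """Split '<Set Name>#<Attribute Name>'; plain attributes have no set."""
    set_name, separator, attribute_name = attribute.partition("#")
    if not separator:
        return None, attribute
    return set_name, attribute_name


print(split_category_name("BankCustomer-44632"))
print(split_set_attribute("Address#Street"))  # hypothetical set and attribute
```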

Only the categories specified in the system rules “target_type” will be assigned to the imported objects.

For setting the category attributes the rules must be associated with the category attributes in the migration set’s |Associations| tab.

Since version 3.2.9, table key lookup attributes are supported in the categories. These attributes should be defined in migration-center in the same way as the other category attributes. The supported types of table key lookup attributes are Varchar, Number and Date. The only limitation is that Date type attributes should be of type String in the migration-center object types.

If the importer parameter overwriteExistingCategories is checked, only the specified category and category attributes associated in the migset will be updated when importing, leaving the rest of the categories the same as they were before the import.

If left unchecked, the categories and category attributes associated in the migset will be updated but any unspecified category in the migset will be removed from the document.

The Documentum NCC (No Content Copy) Importer is a special variant of the regular Documentum Importer. It offers the same features as the regular Documentum Importer, with the difference that the content of the documents is not imported to Documentum during migration. The content files themselves can be attached to the migrated documents in the target repository by using one of the following methods:

copy files from the source storage to the target storage outside of migration-center

attach the source storage to the target repository so the content will be accessed from the original storage.

Delta migration for the multi-page content does not work properly when a new page is added to the primary content (#55739)

Documentum NCC adapter does not work when migration-center is running on a Postgres database.

The Documentum Scanner currently supports Documentum Content Server versions 6.5 to 20.2, including service packs.

For accessing a Documentum repository Documentum Foundation Classes 6.5 or newer is required. Any combinations of DFC versions and Content Server versions supported by EMC Documentum are also supported by migration-center’s Documentum Scanner, but it is recommended to use the DFC version matching the version of the Content Server being scanned. The DFC must be installed and configured on every machine where migration-center Server Components is deployed.

When documents to be migrated are located in a Content Addressable Storage (CAS) like Centera or ECS some additional steps for deployment and configurations are required.

Create the centera.config file in the folder .\lib\mc-dctm-adaptor. The file must contain the following line: PEA_CONFIG = cas.ecstestdrive.com?path=C:/centera/ecs_testdrive.pea Note: Set the storage IP or machine name and the local path to the PEA file.

Copy the Centera SDK jar files into the .\lib\mc-dctm-adaptor folder.

Copy the Centera SDK dlls into a folder that is set in the path variable. Ex: C:\Program Files\Documentum\Shared

The Documentum NCC importer works in combination with a scanner that does not export the content from the source repository. Instead, the scanner exports the dmr_content objects associated with the documents and stores them in the migration-center database as relations of type "ContentRelation". The importer uses the information in the exported dmr_content objects to create the corresponding dmr_content objects in the target repository in such a way that they point to the content in the original filestore.

The Documentum NCC importer supports all the features of the standard Documentum Importer, but the system rules related to the content behave differently, as described below. Also, some dm_document attributes are now mandatory.

Primary content attribute

a_content_type It must be set with the format of the content in the target repository. Leave it empty for the documents that don't have a content.

a_storage_type It must be set with the name of the storage in the target repository where the document will be imported. The target storage must be a storage that points to the filestore where the document was located in the source repository. Leave it empty for the documents that don't have a content.

Rendition system attribute

dctm_obj_rendition It has to be set with the r_object_id of the dmr_content objects scanned from source repository. The required values are provided in the source attribute with the same name.

dctm_obj_rendition_format For every value in dctm_obj_rendition, a rendition format must be specified in this rule. The formats specified in this attribute must be valid formats in the target repository.

dctm_obj_rendition_modifier Specify a page modifier to be set for the rendition. Any string can be set (it must conform to Documentum’s page_modifier attribute, as that’s where the value would end up). Leave empty if you don’t want to set any page modifiers for renditions. If not set, the importer will not set any page modifier.

All renditions scanned from the source repository must be imported in the target repository. If dctm_obj_rendition is set with fewer or more values than the renditions scanned from the source repository, the object will fail to import.
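The constraints on the rendition rules can be summarized in a short Python sketch. It is only an illustration of the checks described above, not the importer's actual validation code; the function name and the error texts are hypothetical.

```python
def check_rendition_rules(scanned_rendition_count, rendition_ids, rendition_formats):
    """Return a list of problems found in the rendition rules of one object.

    scanned_rendition_count: number of renditions scanned from the source repository
    rendition_ids:           values of the rule dctm_obj_rendition
    rendition_formats:       values of the rule dctm_obj_rendition_format
    """
    problems = []
    # Every scanned rendition must be imported: no fewer and no more values.
    if len(rendition_ids) != scanned_rendition_count:
        problems.append(
            f"dctm_obj_rendition has {len(rendition_ids)} values, "
            f"but {scanned_rendition_count} renditions were scanned"
        )
    # One format is required for every value in dctm_obj_rendition.
    if len(rendition_formats) != len(rendition_ids):
        problems.append("dctm_obj_rendition_format needs one value per rendition")
    return problems
```

An empty result means the rule values are consistent with the scanned renditions; whether the formats are valid in the target repository still has to be checked against the repository itself.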

In addition to the standard parameters inherited from the Documentum Importer, some specific parameters are provided in the Documentum NCC importer.

maxSizeEmbeddedFileKB Content Addressable Storage (CAS) allows small content to be saved embedded. Set the maximum size of the content that can be stored embedded. The maximum allowed value is 100 (KB). If 0 is set, no embedded content is saved.

tempStorageName The name of the temporary storage where a dummy content will be created during the migration. For creating dmr_content objects the importer needs to create a temporary dummy content (files of 0 KB) that will be stored in the storage specified in this parameter. This storage should be deleted after the migration is done.

This kind of migration requires some settings to be done on the target repositories at the end of the migration.

As it was described above, the importer creates temporary content during migration. This content is not required to be kept after the migration and therefore the storage set in the parameter "tempStorageName" can be deleted from the target repository.

This section applies only when the content is located in a file storage. When using a Content Addressable Storage, updating the data ticket sequence is not applicable.

After documents are imported to your new Documentum repository, there will be a mismatch of the data ticket offset between the new Documentum file store and the Documentum Content Server cache. The Documentum NCC importer is delivered with a tool that fixes this offset. You can find this tool in your Server Components installation folder under "\Tools\mc-fix-data-ticket-sequence". Running the start.bat file opens a graphical user interface with a dialog to select the repository that contains the imported documents and to provide the credentials to connect to it.

After a successful login, the tool lists the available Documentum file stores that may require an action to fix the data ticket sequence. If the value “offset” of a file store is less than 0, you must update the data ticket sequence of this file store. The column “action” also indicates when action is required. To update the data ticket sequence of a file store, select the file store and press the button “Update data ticket sequence” at the bottom of the tool. After a successful update of the data ticket sequence, you have to restart your repository via the Documentum Server Manager.

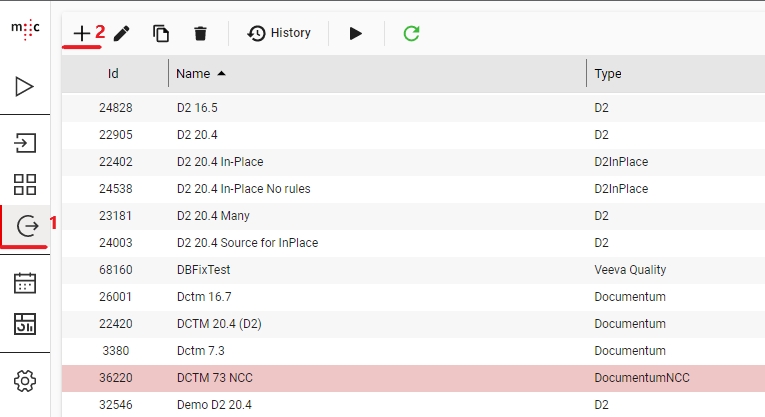

The D2 InPlace connector takes the objects processed in migration-center and imports them back in a Documentum or D2 repository. The D2 InPlace connector extends the Documentum InPlace connector and it works together only with Documentum scanner.

The D2 InPlace connector supports a limited amount of D2 features besides the ones already available in the Documentum InPlace connector such as applying auto-security functionality, auto-linking functionality, auto-naming functionality and validating the values against a property page. All these D2 features can be applied based on the owner user or the migration user.

The D2 InPlace connector currently supports the following D2 versions: 16.5, 16.6, 20.2, 20.4.

The supported D2 Content Server versions are 20.2 and 20.4, including service packs. Any combinations of DFC versions and Content Server versions supported by OpenText Documentum are also supported by migration-center’s D2 InPlace Importer, but it is recommended to use the DFC version matching the version of the Content Server targeted for import. The DFC must be installed and configured on every machine where migration-center Server Components is deployed.

The D2 InPlace connector supports all the regular DCTM based features supported by the DCTM InPlace connector. Please refer to the Documentum InPlace connector documentation for details.

To create a new connector, select "D2InPlace" in the adapter Type drop down of the importer. After this, the list below will be filled with the specific D2InPlace parameters.

The Properties window of an importer can be accessed by double-clicking an importer in the list, by selecting the Properties button from the toolbar or from the context menu.

The common adaptor parameters are described in Common Parameters.

The configuration parameters available for the D2 InPlace connector are described below:

username* Username for connecting to the target repository. A user account with super user privileges must be used to support the full D2/Documentum functionality offered by migration-center.

password* The user’s password.

repository* Name of the target repository. The target repository must be accessible from the machine where the selected Job Server is running.

moveContentOnly

Parameters marked with an asterisk (*) are mandatory.

The Filesystem Importer can save objects from migration-center to the file system. It can also write metadata for those objects into either separate or a unified XML file. The folder structure (if any) can also be created in the filesystem during import. The filesystem can be either local filesystem or a share accessible via a UNC path.

dctm_obj_rendition_page Specify the page number of every rendition in rule dctm_obj_rendition. If not set, the page number 0 will be set for all renditions

dctm_obj_rendition_storage Specify a valid Documentum filestore for every rendition set in the rule dctm_obj_rendition. It must have the same number of values as the dctm_obj_rendition attribute.

autoCreateFolders This option will be used for letting the importer automatically create any missing folders that are part of “dctm_obj_link” or “r_folder_path”. Use this option to have migration-center re-create a folder structure at the target repository during import. If the target repository already has a fixed/predefined folder structure and creating new folders is not desired, deselect this option.

defaultFolderType The Documentum folder type name used when automatically creating the missing object links. If left empty, dm_folder will be used as default type.

moveRenditionContent Flag indicating if renditions will be moved to the new storage. If checked, all renditions and primary content are moved otherwise only the primary content is moved.

moveCheckoutContent Flag indicating if checkout documents will be moved to new storage. If not checked, the importer will throw an error if a document is checked out.

removeOriginalContent Flag indicating if the content will be removed from the original storage. If checked, the content is removed from the original storage, otherwise the content remains there.

moveContentLogFile The file path on the content server where the log related to move content operations will be saved. The folder must exist on the content server. If it does not exist, the log will not be created at all. A value must be set when move content feature is activated by the setting of attribute “a_storage_type”.

applyD2Autonaming Enable or disable D2’s auto-naming rules on import. See Autonaming for more details.

applyD2Autolinking Enable or disable D2’s auto-linking rules on import. See Autolinking for more details.

applyD2AutoSecurity Enable or disable D2’s auto-security rules on import. See Security for more details.

applyD2RulesByOwner Apply D2 rules based on the current document’s owner, rather than the user configured to run the import process. See Applying D2 rules based on a document’s owner for more details.

numberOfThreads The number of threads that will be used for importing objects. Maximum allowed is 20.

loggingLevel* See Common Parameters.

The scanner uses FTP/S to download the content of the documents and their versions from the Veeva Vault environment to the selected export location. This means the content will be exported first from the Veeva Vault to the Veeva FTP server and the Veeva scanner will then download the content files via FTP/S from the Veeva FTP server. So, the necessary outbound ports (i.e. TCP 21 and TCP 56000 – 56100) should be opened in your firewalls as described here:

http://vaulthelp.vod309.com/wordpress/admin-user-help/admin-basics/accessing-your-vaults-ftp-server/
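Before running a scan, it can be useful to check from the Job Server machine that the outbound FTP control port is reachable. The following Python sketch only illustrates the port list given above; the function names are hypothetical. Note that the ports in the range 56000 – 56100 are typically used only while a transfer is in progress, so a failed test connection to one of them does not necessarily mean that the firewall blocks it.

```python
import socket

FTP_CONTROL_PORT = 21
FTP_DATA_PORTS = range(56000, 56101)  # 56000 to 56100 inclusive


def required_ports():
    """Return every outbound TCP port mentioned in the text above."""
    return [FTP_CONTROL_PORT, *FTP_DATA_PORTS]


def can_connect(host, port, timeout=5.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

A call such as can_connect("fme-clinical.veevavault.com", FTP_CONTROL_PORT) would test the control port of the example server used in this document; replace the host with your own Vault domain.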

To create a new Veeva Scanner job click on the New Scanner button and select “Veeva” from the adapter type dropdown list. Once the adapter type has been selected, the parameters list will be populated with the Veeva Scanner parameters.

The Properties of an existing scanner can be accessed after creating the scanner by double-clicking the scanner in the list or by selecting the Properties button/menu item from the toolbar/context menu. A description is always displayed at the bottom of the window for the selected parameter.

Multiple scanners can be created for scanning different locations, provided each scanner has a unique name.

The common adaptor parameters are described in Common Parameters.

The configuration parameters available for the Veeva Scanner are described below:

username* Veeva username. It must be a Vault Owner.

password* The user’s password.

server* Veeva Vault fully qualified domain name. Ex: fme-clinical.veevavault.com

proxyServer The name or IP of the proxy server if there is any.

proxyPort The port for the proxy server.

proxyUser The username if required by the proxy server.

proxyPassword The password if required by the proxy server.

documentSelection The parameter contains the conditions, which will be used in the WHERE statement of the VQL. The Vault will validate the conditions. If the string comprises several conditions, you must ensure the intended order between the logical operators. If the parameter is empty, then the entire Veeva Vault will be scanned.

Examples:

type__v='Your Type'

classification__v='SOP'

product__v='00P000000000201' OR product__v='00P000000000202'

(study__v='0ST000000000501' OR study__v='0ST000000000503') AND blinding__v='Blinded'
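When a documentSelection value combines several conditions, explicit parentheses make the intended order of the logical operators unambiguous. The Python sketch below shows one way to assemble such a string; the helper functions are hypothetical and not part of migration-center or the Vault API, and values containing a single quote are rejected rather than escaped.

```python
def eq(field, value):
    """Build a single condition such as classification__v='SOP'."""
    if "'" in value:
        # Quote escaping is deliberately left out of this sketch.
        raise ValueError("values containing a single quote are not handled here")
    return f"{field}='{value}'"


def any_of(*conditions):
    """Join conditions with OR; parenthesize when there is more than one."""
    if not conditions:
        raise ValueError("at least one condition is required")
    if len(conditions) == 1:
        return conditions[0]
    return "(" + " OR ".join(conditions) + ")"


def all_of(*conditions):
    """Join conditions with AND."""
    if not conditions:
        raise ValueError("at least one condition is required")
    return " AND ".join(conditions)


selection = all_of(
    any_of(eq("study__v", "0ST000000000501"), eq("study__v", "0ST000000000503")),
    eq("blinding__v", "Blinded"),
)
```

The resulting string reproduces the last example above and can be pasted into the documentSelection parameter; the Vault still validates the conditions when the scanner runs.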

batchSize* The batch size representing how many documents to be loaded in a single bulk operation.

scanBinders The flag indicates if the binders should be scanned or not. This parameter will scan just the latest version of each version tree.

scanBinderVersions Flag indicating if all the versions should be scanned or not. If it is checked, every version will be scanned.

maintainBinderIntegrity The flag indicates if the scanner must maintain the binder integrity or not. If it is checked, all the children will be exported to keep consistency.

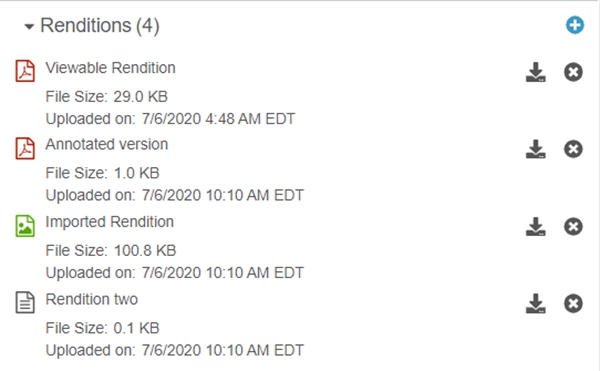

exportRenditions This checkbox parameter indicates if the document renditions will be scanned or not.

renditionTypes This parameter indicates which types of renditions will be scanned. If the value is left empty, all the types will be exported. This parameter is repeating and is used just if the exportRenditions is checked.

Examples:

viewable_rendition__v

custom_rendition__c

exportSignaturePages The flag indicates if the Signature Page should be added to the viewable rendition. If checked, the scanner will export the viewable rendition of the documents (when it is in the scope of the rendition export) including any eSignature pages. If unchecked, the scanner will export the viewable rendition of the documents (when it is in the scope of the rendition export) without any eSignature pages.

exportCrosslinkDocuments This checkbox indicates if the cross-link documents should be scanned or not.

scanAuditTrails This checkbox parameter indicates if the audit trail records of the scanned documents will be scanned as a separate MC object. The audit trail object will have ‘Source_type’ attribute set to Veeva(audittrail) and the ‘audited_obj_id’ will contain the source id of the audited document.

enableDeltaForAuditTrails Flag indicating if new audit trails should be detected during a delta scan even if the document was not changed.

skipFtpContentDownload This flag indicates if the transfer of the content files from the FTP staging server should be skipped. If selected, the contents will remain on the staging server and the scanner will store their FTP paths.

The FTP paths have the following OOTB pattern:

ftp:/u<user-id>/<veeva-job-id>/<doc-id>/<version-identifier>/<doc-name>

Examples:

ftp:/u6236635/23625/423651/2_1/monthly-bill.pdf
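If the stored FTP paths need to be processed later (for example in a custom download step), they can be split into their components. The following Python sketch assumes the OOTB pattern shown above; the class and function names are hypothetical.

```python
import re
from typing import NamedTuple


class StagedFile(NamedTuple):
    user_id: str
    job_id: str
    doc_id: str
    version: str
    name: str


# ftp:/u<user-id>/<veeva-job-id>/<doc-id>/<version-identifier>/<doc-name>
_FTP_PATH = re.compile(
    r"ftp:/u(?P<user_id>[^/]+)/(?P<job_id>[^/]+)/(?P<doc_id>[^/]+)"
    r"/(?P<version>[^/]+)/(?P<name>.+)"
)


def parse_staged_path(path):
    """Split an FTP staging path into its five components."""
    match = _FTP_PATH.fullmatch(path)
    if match is None:
        raise ValueError(f"not a staging path: {path!r}")
    return StagedFile(**match.groupdict())
```

Applied to the example above, the function returns the user ID 6236635, the job ID 23625, the document ID 423651, the version identifier 2_1 and the file name monthly-bill.pdf.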

useHTTPSContentTransfer This flag indicates if the content files should be transferred from the Veeva Vault to the export location via the REST endpoint. When this box is checked, the scanner will download every content file sequentially via HTTPS.

Note: This feature is much slower, but it will not involve any FTP connection.

downloadFtpContentUsingRest Flag indicating if the content from Staging Folder will be downloaded by using REST API.

This is the recommended way to download the content locally.

Note: Connection to FTPS server is not made so the FTPS ports don't need to be opened.

exportLocation* Folder path. The location where the exported object content should be temporarily saved. It can be a local folder on the same machine with the Job Server or a shared folder on the network. This folder must exist prior to launching the scanner and the user account running the Job Server must have write permission on the folder. migration-center will not create this folder automatically. If the folder does not exist, an appropriate error will be raised and logged. This path must be accessible by both scanner and importer so if they are running on different machines, it should be a shared folder.

ftpMaxNrOfThreads* Maximum number of concurrent threads that will be used to transfer content from the FTP server to the local system. The maximum value allowed by the scanner is 20, but according to Veeva migration best practices it is strongly recommended to use a maximum of 5 threads.

loggingLevel* See Common Parameters.

Parameters marked with an asterisk (*) are mandatory.

There is a configuration file for additional settings for the Veeva Scanner located under the …/lib/mc-veeva-scanner/ folder in the Job Server install location. It has the following properties that can be set:

request_timeout The time in milliseconds the scanner waits for a response after every API call. Default: 600000 ms

client_id_prefix This is the prefix of the Client ID that is passed to every Vault API call. The Client ID is always logged in the report log. For a better identification of the requests (if necessary) the default value should be changed to include the name of the company as described here: https://developer.veevavault.com/docs/#client-id Default: fme-vault-client-migrationcenter

no_of_request_attempts Represents the number of request attempts when the first call fails due to a server error (5xx error code). The REST API call will be executed at least once independent of the parameter value. Default: 2
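The behavior described for no_of_request_attempts can be pictured with the following Python sketch. It is not the scanner's implementation: it assumes that the value is the total number of attempts (including the first call), and the function name and the send callable are hypothetical.

```python
def call_with_attempts(send, no_of_request_attempts=2):
    """Call send() until it returns a non-5xx status or the attempts are used up.

    send: a callable that performs one request and returns the HTTP status code.
    The call is executed at least once, whatever value is configured.
    """
    attempts = max(1, no_of_request_attempts)
    status = None
    for _ in range(attempts):
        status = send()
        if not 500 <= status <= 599:
            break  # success or a client error: repeating the call will not help
    return status
```

With the default of 2, a call that first returns 503 and then 200 succeeds on the second attempt; with a value of 0, the request is still sent once.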

The Veeva Scanner connects to the Veeva Vault by using the username, password & server name from the configuration. The FTP/S connection is done internally by following the standard instructions computing the FTP username from the server and the user. Additionally, to use the proxy functionality, you have to provide a proxyUser, proxyPassword, proxyPort & proxyServer.

The scanner can export all the versions of a document, as a version tree, together with their rendition files and audit trails if they exist. The scanner will export the documents in batches of provided size using the condition specified in the configuration.

The Veeva Scanner uses a VQL query to determine the documents to be scanned. By leaving the documentSelection parameter empty, the scanner will export all available documents from the entire Vault.

A Crosslink is a document created in one Vault that uses the viewable rendition of another document in another Vault as its source document.

For scanning crosslinks, it is necessary to set the exportCrosslinkDocuments checkbox in the scanner configuration. The scanned crosslink object will have the isCrosslink attribute set to true, and this is how you can separate the documents and crosslinks from each other.

The scanner configuration view contains the exportRenditions parameter, which lets you export the renditions. Moreover, you can specify exactly which rendition files are exported by listing the desired types in the renditionTypes parameter.

If the exportRenditions parameter is checked and the renditionTypes parameter contains the values annotated_version__c and rendition_two__c, then just these two renditions will be exported.

We strongly recommend using a separate user for the migration project, because the Veeva Vault will generate a new audit trail record for every document for every action (extracting metadata, downloading the document content, etc.) made during the scanning process.

Veeva Scanner allows scanning audit trails for every scanned document as a distinct MC source object. The audit trails will be scanned as ‘Veeva(audittrail)’ objects if the checkbox scanAuditTrails is set. The audited_obj_id attribute contains the source system id of the document that has this audit trail.

The audit trails can be detected by migration-center during the delta scan even if the audited document was not changed if the checkbox enableDeltaForAuditTrails is set.

The Veeva Scanner allows you to extract the binders in such a way that they can be imported in an OpenText Documentum repository as Virtual Documents. The feature is provided by three parameters: scanBinders, scanBinderVersions, maintainBinderIntegrity. On the Veeva Vault side, the binders are managed just like documents, but with the binder__v attribute set to true.

To fully migrate binders to virtual documents, you have to scan them by maintaining their integrity to be able to rebuild them on Documentum side.

The scanner will create a relationship between the binder and its children. Moreover, the scanner supports scanning of nested binders. The relationship will contain all the information required by the Documentum importer.

To create a new Filesystem Importer job, specify the respective adapter type in the Importer Properties window – from the list of available connectors “Filesystem” must be selected. Once the adapter type has been selected, the Parameters list will be populated with the parameters specific to the selected adapter type.

The Properties window of an importer can be accessed by double-clicking an importer in the list, or selecting the Properties button/menu item from the toolbar/context menu.

A detailed description is always displayed at the bottom of the window for a selected parameter.

The common adaptor parameters are described in Common Parameters.

The configuration parameters available for the Filesystem importer are described below:

xsltPath The path to the XSL file used for transformation of the meta-data (leave empty for default metadata XML output)

unifiedMetadataPath The path and filename where the unified metadata file should be saved; the parent folder must exist, otherwise the import will stop with an error Leave empty to create individual XML metadata files for each object

unifiedMetadataRootNodes The list of XML root nodes to be inserted in the unified metadata file, which will contain the document and folder metadata nodes. The default value is “root”, which will create a <root>…</root> element. Multiple values are also supported, separated by “;”, e.g. “root;metadata”, which would create a <root><metadata>…</metadata></root> structure in the XML file for storing the object’s metadata.

moveFiles Flag for moving content files. Unchecked: the content files will only be copied. Checked: the content files will be moved (copied and then deleted from the original location). Default: false

loggingLevel* See Common Parameters.
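The effect of a multi-value unifiedMetadataRootNodes setting can be pictured with a short Python sketch. It assumes that the listed names are nested in the given order, with the object metadata placed inside the innermost node; the function name is hypothetical and the sketch is not the importer's implementation.

```python
import xml.etree.ElementTree as ET


def build_root_nodes(unified_metadata_root_nodes="root"):
    """Create the nested root elements for a value such as 'root;metadata'.

    Returns (outermost, innermost); object metadata would be appended
    to the innermost element.
    """
    names = [name for name in unified_metadata_root_nodes.split(";") if name]
    if not names:
        raise ValueError("at least one root node name is required")
    outermost = ET.Element(names[0])
    innermost = outermost
    for name in names[1:]:
        innermost = ET.SubElement(innermost, name)
    return outermost, innermost
```

With the default value, both returned elements are the same single root element; with “root;metadata”, the metadata element is created as a child of the root element.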

Parameters marked with an asterisk (*) are mandatory.

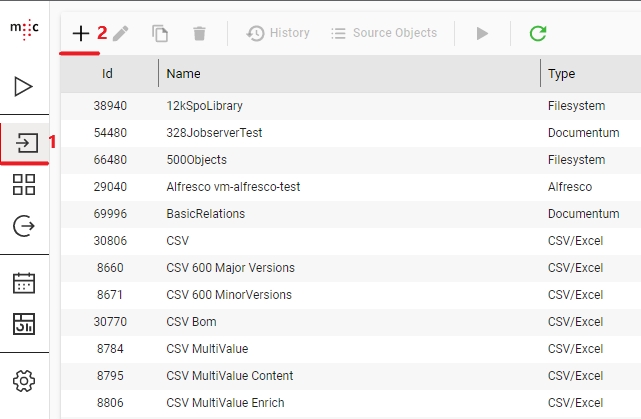

Documents targeted at the filesystem will have to be added to a migration set first. This migration set must be configured to accept objects of type <source object type>ToFilesystem(document).

Create a new migration set and set the <source object type>ToFilesystem(document) object type in the Type drop-down. This is set in the “Migration Set Properties” window, which appears when creating a new migration set. The type of object can no longer be changed after a migration set has been created.

content_target_file_path Sets the full path, including filename and extension, where the current document should be saved on import. Use the available transformation methods to build a string representing a valid file system path. If not set, the source content will be ignored by the importer. Example: d:\Migration\Files\My Documents\Report for 12-11.xls

rendition_source_file_paths Sets the full path, including filename and extension, where a “rendition” file for the current document is located. Use the available transformation methods to build a string representing a valid file system path. Example: \\server\share\Source Data\Renditions\Report for 12-11.pdf This is a multi-value rule, allowing multiple paths to be specified if more than one rendition exists (PDF, plain text, and XML for example).

rendition_target_file_paths Sets the full path, including filename and extension, where a “rendition” file for the current document should be saved to. Typically this would be somewhere near the main document, but any valid path is acceptable. Use the available transformation methods to build a string representing a valid file system path. Example: d:\Migration\Files\My Documents\Renditions\Report for 12-11.pdf This is a multi-value rule, allowing multiple paths to be specified if more than one rendition exists (PDF, plain text, and XML for example)

metadata_file_path The path to the individual metadata file that will be generated for current object.

created_date Sets the “Created” date attribute in the filesystem.

modified_date Sets the “Modified” date attribute in the filesystem.

file_owner Sets the “Owner” attribute in the filesystem. The user e.g. “domain\user” or “jdoe” must either exist in the computer ‘users’ or in the LDAP-directory.

Since the Filesystem doesn’t use different object types for files, the Filesystem Importer doesn’t need this information either. But due to migration-center’s workflow an association with at least one object type needs to exist in order to validate and prepare objects in a migration set for import.

To work around this, any existing migration-center object type definition can be used with the Filesystem Importer. A good practice would be to create a new object type definition containing the attribute values used with the Filesystem Importer, and to use this object type definition for association and validation.

In addition to the actual content files, metadata files containing the objects attributes can be created when outputting files from migration-center. These files use a simple XML schema and usually should be placed next to the objects they are related to. It is also possible to collect metadata for all objects imported in a given run to a single metadata file, rather than separate files.

Starting with version 3.2.6 the way of creating objects metadata has become more flexible. The following options are available:

Generate the metadata for each object to an individual xml file. The name and the location of the individual metadata file is now configurable through the system rule “metadata_file_path”. If left empty no individual metadata files will be generated.

Generate the metadata of the imported objects in a single xml file. The name and the location of the unified metadata file will be set in the importer parameter “unifiedMetadataPath”. In this case the system rule “metadata_file_path” must be empty.

Generate the metadata for each object to an individual xml file and create also the unified metadata file. The individual metadata file will be set through the system rule “metadata_file_path” and the unified metadata through the importer parameter “unifiedMetadataPath”

Import only the content of files without generating any metadata file. In this case the system rule “metadata_file_path” and the importer parameter “unifiedMetadataPath” should be left empty.
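The four options follow directly from which of the two settings is filled in. The Python sketch below simply restates that decision table; the function name and the returned labels are hypothetical.

```python
def metadata_outputs(metadata_file_path, unified_metadata_path):
    """Describe which metadata files are produced for the given settings.

    metadata_file_path:    value of the system rule "metadata_file_path"
    unified_metadata_path: value of the importer parameter "unifiedMetadataPath"
    """
    individual = bool(metadata_file_path and metadata_file_path.strip())
    unified = bool(unified_metadata_path and unified_metadata_path.strip())
    if individual and unified:
        return "individual and unified"
    if individual:
        return "individual only"
    if unified:
        return "unified only"
    return "content only, no metadata"
```

Because “metadata_file_path” is a system rule evaluated per object while “unifiedMetadataPath” is set once on the importer, the outcome can differ from object to object within the same import run.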

A sample metadata file’s XML structure is illustrated below. The sample content could belong to the report.pdf.fme file mentioned above. In this case the report.pdf file has 4 attributes, each attribute being defined as a name-value pair. There are five lines because one of the attributes is a multi-value attribute. Multi-value attributes are represented by repeating the attribute element with the same name, but different value attribute (i.e. the keywords attribute is listed twice, but with different values)
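The way multi-value attributes are repeated can be sketched in Python as follows. Only the <contentattributes> node name and the keywords attribute come from this document; the element name “attribute”, the XML attribute names “name” and “value”, and all sample values are assumptions made for illustration, and the files produced by the importer may differ.

```python
import xml.etree.ElementTree as ET


def build_metadata(attributes):
    """Build one metadata node; multi-value attributes are repeated per value."""
    root = ET.Element("contentattributes")
    for name, values in attributes.items():
        if isinstance(values, str):
            values = [values]
        for value in values:
            # Assumed element and XML attribute names, see the note above.
            ET.SubElement(root, "attribute", name=name, value=value)
    return root


# Four attributes, one of them multi-value, giving five attribute elements.
sample = {
    "title": "Report",
    "author": "jdoe",
    "format": "pdf",
    "keywords": ["finance", "monthly"],
}
metadata = build_metadata(sample)
```

Serializing the result with ET.tostring(metadata, encoding="unicode") shows the keywords attribute twice, once for each of its values.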

To generate metadata files in a different format than the one above, an XSL template can be used to transform the above XML into another output. To use this functionality, a corresponding XSL file needs to be built and its location specified in the importer’s parameters. This way it is possible to obtain XML files in a format that can be processed further by other software if needed. The specified XSL template will apply to both metadata files: individual and unified.

For a unified metadata file it is also possible to specify the name of the root node (through an importer parameter) that will be used to enclose the individual objects’ <contentattributes> nodes.

Filesystem attributes like created, modified and owner can not only be set in the metadata file but they are also set on the created content file in the operating system. Any source attribute can be used and mapped to one of these attributes in the migset system rules.

Even though the filesystem does not explicitly support “renditions”, i.e. representations of the same file in different formats, the Filesystem Importer can work with multiple files which represent different formats of the same content. The Filesystem Importer does not and cannot generate these files. “Renditions” would typically come from an external source, such as PDF representations of editable Office file formats or technical drawings created using one of the many PDF generation applications available, or renditions extracted by a migration-center scanner from a system which supports such a feature. If files intended to be used as renditions exist, the Filesystem Importer can be configured to get these files from their current location and move them to the import location together with the migrated documents. The “renditions” can then be renamed, for example in order to match the name of the main document they relate to; any other transformation is of course also possible. “Renditions” are an optional feature and can be managed through dedicated system rules during the migration. See the system rules rendition_source_file_paths and rendition_target_file_paths for more.

The source data imported with the Filesystem Importer can originate from various content management systems which typically also support multiple versions of the same object.

The Filesystem Importer does not support outputting versioned objects to the filesystem (multiple versions of the same document for example).

This is due to the filesystem’s design which does not support versioning an object, nor creating multiple files in the same folder with the same name. If versions need to be imported using the Filesystem Importer the version information should be appended to the filename attribute to generate unique filenames. This way there will be no conflicting names and the importer will be able to write all files correctly to the location specified by the user.
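In migration-center, the unique filename would be built with transformation rules. The Python sketch below only illustrates the idea of inserting the version information before the file extension; the function name and the “_v” separator are arbitrary choices for this example.

```python
from pathlib import PureWindowsPath


def versioned_path(target_path, version_label):
    """Insert a version label before the extension of a Windows file path."""
    path = PureWindowsPath(target_path)
    return str(path.with_name(f"{path.stem}_v{version_label}{path.suffix}"))
```

For the path d:\Migration\Files\My Documents\Report for 12-11.xls and the version label 1.0, the result is d:\Migration\Files\My Documents\Report for 12-11_v1.0.xls, so each version of the document is written to its own file.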

The source data imported with the Filesystem Importer can originate from various content management systems which can support multiple links for the same object, i.e. one and the same object being accessible in multiple locations.

The Filesystem Importer does not support creating multiple links for objects in the filesystem (the same folder linked to multiple different parent folders for example). If the object to be imported with the Filesystem Importer has had multiple links originally, only the first link will be preserved and used by the Filesystem Importer for creating the respective object. This may put some objects in unexpected locations, depending on how the objects were linked or arranged originally.

Using scanner configuration parameters and/or transformation rules it should be possible to filter out any unneeded links, leaving only the required links to be used by the Filesystem Importer.

The Alfresco Scanner extracts objects such as documents, folders and lists and saves this data to migration-center for further processing. The key features of the Alfresco Scanner are:

Extract documents, folders, custom lists and list items

Extract content, metadata

Extract document versions

The content of the latest version is missing online edits when cm:autoVersion was false and is then switched to true before scanning (#55983)

The Alfresco connectors are not included in the standard migration-center Jobserver. They are delivered as an Alfresco Module Package (.amp), which has to be installed in the Alfresco Repository Server. This .amp file contains an entire Jobserver that runs under Alfresco's Tomcat and contains only the Alfresco connectors. To use other connectors, please install the regular Server Components as described in the and use that Jobserver.

The following versions of Alfresco are supported (on Windows or Linux): 4.0, 4.1, 4.2, 5.2, 6.1.1, 6.2.0, 7.1, 7.2, 7.3.1. Java 1.8 is required for the installation of Alfresco Scanner.

To use the Alfresco Scanner, your scanner configuration must use the Alfresco Server as a Jobserver, with port 9701 by default.

The first step of the installation is to copy the mc-alfresco-adaptor-<version>.amp file into the “amps-folder” of the Alfresco installation.

The last step is to finish the installation by installing the mc-alfresco-adaptor-<version>.amp file as described in the Alfresco documentation:

Before doing this, please back up your original alfresco.war and share.war files to ensure that you can uninstall the migration-center Jobserver after a successful migration. This is currently the only way to uninstall it, because the Module Management Tool of Alfresco does not support removing a module from an existing WAR file.

The Alfresco server should be stopped when applying the .amp files. Note that Alfresco provides scripts for installing .amp files, e.g.:

C:\Alfresco\apply_amps.bat (Windows)

/opt/alfresco/commands/apply_amps.sh (Linux)
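The backup recommended above could be scripted before running apply_amps. The following Python sketch is only an illustration: the function name is hypothetical, and the directory containing the WAR files as well as the backup directory depend on the Alfresco installation and must be supplied by the administrator.

```python
import shutil
from pathlib import Path

# The two WAR files named in this guide.
WAR_FILES = ("alfresco.war", "share.war")


def backup_wars(webapps_dir, backup_dir):
    """Copy the original WAR files to a backup folder (hypothetical helper)."""
    webapps = Path(webapps_dir)
    target = Path(backup_dir)
    # Fail before copying anything if one of the WAR files is missing.
    missing = [name for name in WAR_FILES if not (webapps / name).is_file()]
    if missing:
        raise FileNotFoundError(f"WAR file(s) not found: {', '.join(missing)}")
    target.mkdir(parents=True, exist_ok=True)
    copied = []
    for name in WAR_FILES:
        # copy2 also preserves file timestamps where the platform allows it.
        copied.append(Path(shutil.copy2(webapps / name, target / name)))
    return copied
```

Run such a script with the Alfresco server stopped, and keep the backup folder until the migration is finished so that the original WAR files can be restored for uninstallation.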

The Alfresco Scanner can be uninstalled with the following steps:

Stop the Alfresco Server.

Restore the original alfresco.war and share.war files that were backed up before the Alfresco Scanner installation.

Remove the file mc-alfresco-adaptor-<version>.amp from the “amps-folder”.

To create a new Alfresco Scanner, create a new scanner and select Alfresco from the Adapter Type drop-down. Once the adapter type has been selected, the Parameters list will be populated with the parameters specific to the selected adapter type. Mandatory parameters are marked with an *.

The Properties of an existing scanner can be accessed after creating the scanner by double-clicking the scanner in the list, or selecting the Properties button/menu item from the toolbar/context menu. A description is always displayed at the bottom of the window for the selected parameter.

Multiple scanners can be created for scanning different locations, provided each scanner has a unique name.

The common adaptor parameters are described in .

The configuration parameters available for the Alfresco Scanner are described below:

username*

User name for connecting to the source repository. A user account with admin privileges must be used to support the full Alfresco functionality offered by migration-center.

Example: Alfresco.corporate.domain\spadmin

password*

Password of the user specified above

Parameters marked with an asterisk (*) are mandatory.

The OnBase Importer is one of the target connectors available in migration-center starting with version 3.17. It takes the objects processed in migration-center and imports them into an OnBase platform.

The current importer features are:

Import documents

Set custom metadata

Import document revisions

Delta migration (only for metadata)

The importer cannot use a service account, because the Unity API does not support service accounts.

An additional OnBase license might be required, because migration-center uses the Unity API to ingest the data.

Windows Authentication should be disabled in the OnBase Application Server, because the importer uses OnBase Authentication.

Click on the New Importer button to build a new OnBase Importer job and pick "OnBase" from the list of connectors. Once the adapter type has been selected, the Parameters list will be populated with the parameters specific to the selected adapter type, in this case OnBase.

The common adaptor parameters are described in .

The configuration parameters available for the OnBase importer are described below:

username* OnBase username. It must be a valid Hyland OnBase account. Service accounts are not supported.

password* The user's password.

serverUrl* The OnBase server URL. Example: http://example/AppServer/Service.asmx

datasource* The data set (repository) where the content should be imported.

Parameters marked with an asterisk (*) are mandatory.

Documents are part of the application data model in OnBase. They are usually represented by a document type, content, file_format, and some specific keywords.

The OnBase Importer allows importing document records from any supported source system to OnBase. For that, a migset of type “<Source>toOnBase(document)” must be created. Example: importing documents from Documentum requires migsets of type “DctmtoOnBase(document)”.

file_format* The OnBase file format corresponding to the established content. Example: Text Report Format

mc_content_location Optional rule for importing the content from a location other than the one exported by the scanner. If not set, the source object's content_location will be used.

target_type* The name of the OnBase document type corresponding to a migration-center internal object type that is used in the association. Example: OnBase Document

Rules marked with an asterisk (*) are mandatory.
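The behaviour of the system rules listed above can be summarized in a small Python sketch. It does not reflect the importer's actual implementation; the function names and the dictionary representation of a migset object's rule values are assumptions for illustration.

```python
# Mandatory system rules as marked with an asterisk in this section.
MANDATORY_RULES = ("file_format", "target_type")


def missing_mandatory_rules(rules: dict) -> list:
    """Return the mandatory rules that have no value (hypothetical check)."""
    return [name for name in MANDATORY_RULES if not rules.get(name)]


def resolve_content_location(rules: dict, source_content_location: str) -> str:
    """Use mc_content_location if set, else the scanned content_location."""
    return rules.get("mc_content_location") or source_content_location


rules = {"file_format": "Text Report Format", "target_type": "OnBase Document"}
print(missing_mandatory_rules(rules))
print(resolve_content_location(rules, r"\\share\export\doc1.txt"))
```

The fallback mirrors the description of mc_content_location: the rule only overrides the scanned location when it actually has a value.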

A mapping list can be used to set the file format conveniently.

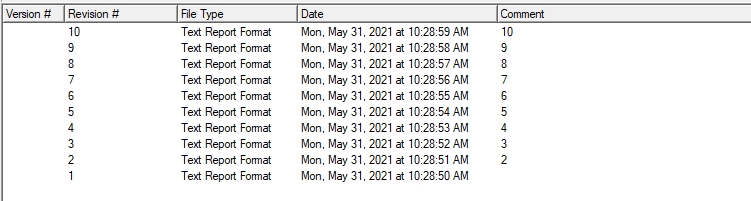

Revisions are part of the application data model in OnBase. Compared to other platforms, OnBase uses “Revisions”, which are similar to the widely used concept of versions. The OnBase platform also has “Versions” but uses them in a different way: Versions are “stamped Revisions” which can have a version description.

The OnBase Importer allows importing revision records from any supported source system to Hyland OnBase. For that, a migset of type “<Source>toOnBase(document)” must be created. Example: importing revisions from CSV requires migsets of type “CsvExceltoOnBase(document)”.

At least one version tree must be scanned in order to import revisions. Each scanned version is equivalent to a revision in OnBase.

In the example below we have mapped level_in_version_tree to the revision description, using the optional revision comment system rule, to easily describe how the revisions in OnBase correlate with the scanned versions.

Objects that have changed in the source system since the last scan are scanned as update objects. Whether an object in a migration set is an update or not can be seen by checking the value of the Is_update column – if it’s 1, the current object is an update to a previously scanned object (the base object). An updated object cannot be imported unless its base object has been imported previously.
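The dependency between an update object and its base object can be expressed as a simple check. The Python sketch below is illustrative only: apart from the Is_update flag, the field names and the set of already imported object IDs are hypothetical and do not describe migration-center's internal data model.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MigsetObject:
    object_id: str
    is_update: int                  # 1 = update of a previously scanned object
    base_id: Optional[str] = None   # hypothetical reference to the base object


def can_import(obj: MigsetObject, imported_ids: set) -> bool:
    """An update may only be imported after its base object was imported."""
    if obj.is_update != 1:
        return True
    return obj.base_id in imported_ids


base = MigsetObject("doc-1", is_update=0)
update = MigsetObject("doc-1-u1", is_update=1, base_id="doc-1")
print(can_import(update, imported_ids=set()))      # base not imported yet
print(can_import(update, imported_ids={"doc-1"}))  # base already imported
```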

Currently, update objects are processed by the OnBase Importer with the following limitations:

Only the keywords are updated for the documents and revisions.

New keywords for existing objects can be set by using delta migration.

The keywords of a document and its revisions are shared, so updating the keywords of a document or of one of its revisions updates them for the document and all of its revisions.

Version 3.17 of the OnBase Importer can only update the keywords of existing documents or revisions.

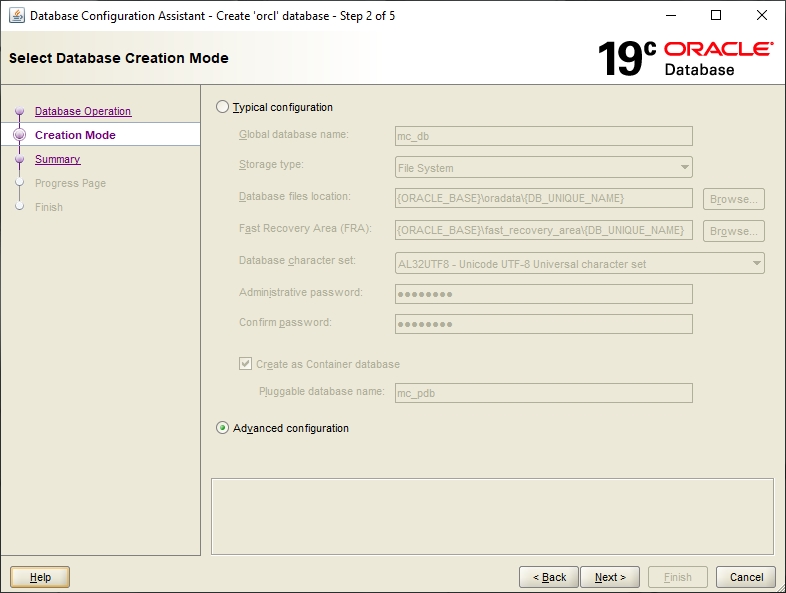

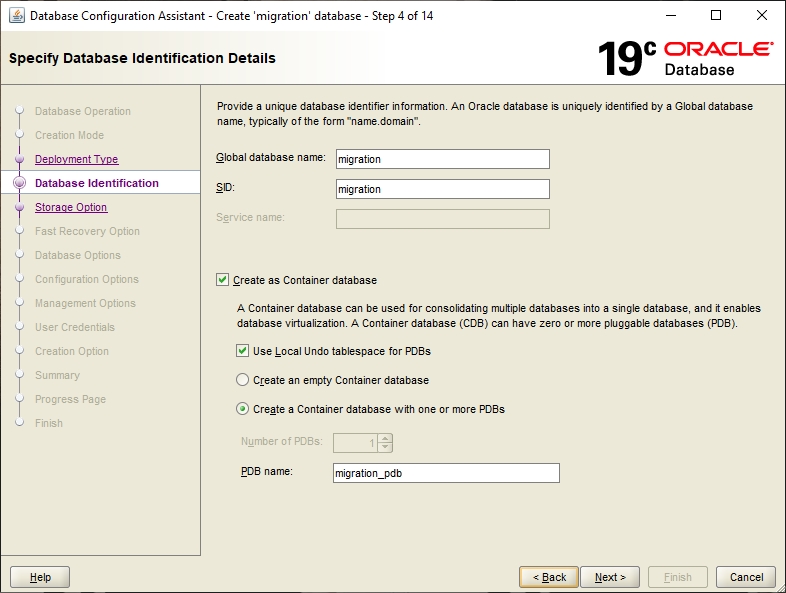

Migration-center uses an Oracle database to store information about documents, migration sets, users, jobs, settings and others.

This guide describes the process of creating an Oracle 19c (v19.3.0.0.0) database instance on Microsoft Windows. Even though the description is very detailed, the installing administrator is expected to have a good degree of experience in configuring databases.

<?xml version="1.0" encoding="UTF-8"?>

<contentattributes>

<attribute name="keywords" value="Benchmark" />

<attribute name="keywords" value="Technical" />

<attribute name="reference_period" value="26.11.2001" />

<attribute name="reference_period_from" value="26.11.2001" />

<attribute name="reference_period_to" value="01.01.2100" />

</contentattributes>

scanLocations

The entry point(s) in the Alfresco repository where the scan starts.

Multiple values can be entered by separating them with the “|” character.

Each value must follow the Alfresco Repository folder structure, for example:

/Sites/SomeSite/documentLibrary/Folder/AnotherFolder

/Sites/SomeSite/dataLists/02496772-2e2b-4e5b-a966-6a725fae727a

Valid scan locations: an entire site, a specific library, a specific folder in a library, a specific data list.

If any location is invalid, the scanner reports an appropriate error to the user and does not start.
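How a scanLocations value with several entries is split can be sketched in Python as follows. This only illustrates the “|” separator and the all-or-nothing behaviour described above. The real scanner validates each location against the repository, whereas the leading “/” check used here is merely an assumption derived from the example paths, and the function name is hypothetical.

```python
def parse_scan_locations(value: str) -> list[str]:
    """Split a scanLocations value on '|' and reject malformed entries."""
    locations = [part.strip() for part in value.split("|")]
    # Assumption: a location is a repository path starting with '/'.
    invalid = [loc for loc in locations if not loc.startswith("/")]
    if invalid:
        # One invalid entry is enough to refuse the whole configuration.
        raise ValueError(f"Invalid scan location(s): {invalid!r}")
    return locations


value = (
    "/Sites/SomeSite/documentLibrary/Folder/AnotherFolder"
    "|/Sites/SomeSite/dataLists/02496772-2e2b-4e5b-a966-6a725fae727a"
)
for location in parse_scan_locations(value):
    print(location)
```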

contentLocation*

Folder path. The location where the exported object content is temporarily saved. It can be a local folder on the same machine as the Jobserver or a shared folder on the network. This folder must exist prior to launching the scanner, and the Jobserver must have write permissions for it. The path must be accessible by both the scanner and the importer, so if they are running on different machines, it should be a shared folder.

exportLatestVersions

This parameter specifies how many versions of every version tree are exported, counting from the latest version towards the older versions. If the value is empty, not a valid number, zero, negative, or greater than the number of versions in the tree, all versions are exported.
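The rules for exportLatestVersions can be restated as a short Python sketch. It is an interpretation of the description above, not the scanner's code: the function name is hypothetical, the versions are assumed to be ordered from oldest to newest, and a value larger than the number of versions in the tree is treated as "export all".

```python
def select_versions(versions: list, export_latest_versions) -> list:
    """Return the versions to export from one version tree."""
    try:
        n = int(str(export_latest_versions).strip())
    except ValueError:
        # Empty or non-numeric value: export everything.
        return list(versions)
    if n <= 0 or n > len(versions):
        return list(versions)
    # Keep the n most recent versions (the list is ordered oldest first).
    return list(versions[-n:])


tree = ["1.0", "1.1", "1.2", "2.0"]
print(select_versions(tree, "2"))
print(select_versions(tree, ""))
```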

exportContent

Setting this parameter to true will extract the actual content of the documents during the scan and save it in the contentLocation specified earlier.

This setting should always be enabled in a production environment.

dissolveGroups

Setting this parameter to true will cause every group permission to be scanned as permissions of the individual users that make up the group.
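The effect of dissolveGroups can be pictured with the following Python sketch. The data structures are assumptions made for illustration (permissions as authority/role pairs, group membership as a flat dictionary without nested groups) and do not describe how the scanner represents permissions internally.

```python
def dissolve_groups(permissions: list, group_members: dict) -> list:
    """Replace each group permission with one permission per member user."""
    result = []
    for authority, role in permissions:
        if authority in group_members:
            # The group entry is replaced by its individual members.
            result.extend((user, role) for user in group_members[authority])
        else:
            result.append((authority, role))
    return result


permissions = [("GROUP_editors", "SiteCollaborator"), ("jdoe", "SiteConsumer")]
members = {"GROUP_editors": ["alice", "bob"]}
print(dissolve_groups(permissions, members))
```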

excludeAttributes

List of attributes to be excluded from scanning. Multiple values can be entered by separating them with the “|” character.
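As a rough illustration of excludeAttributes, the Python sketch below removes the listed attributes from a scanned object's metadata. The function name and the dictionary representation of the metadata are hypothetical; only the “|” separator is taken from the description above.

```python
def filter_attributes(attributes: dict, exclude_attributes: str) -> dict:
    """Drop the attributes named in a '|'-separated exclusion list."""
    excluded = {
        name.strip() for name in exclude_attributes.split("|") if name.strip()
    }
    return {k: v for k, v in attributes.items() if k not in excluded}


metadata = {"cm:name": "a.txt", "cm:title": "A", "cm:description": "Draft"}
print(filter_attributes(metadata, "cm:title|cm:description"))
```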

loggingLevel*

See Common Parameters.

numberOfThreads* The number of threads that the importer will use to import the documents.

loggingLevel* See Common Parameters.

document_date Sets the Document Date.

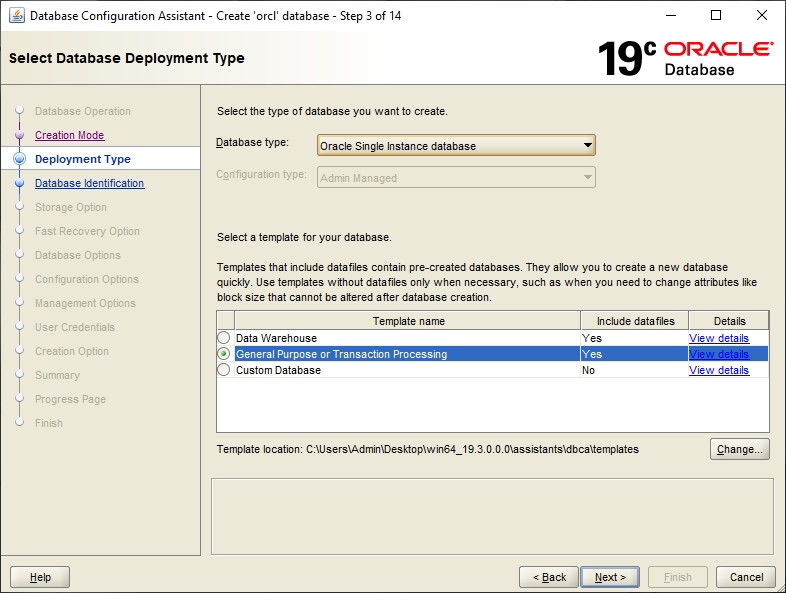

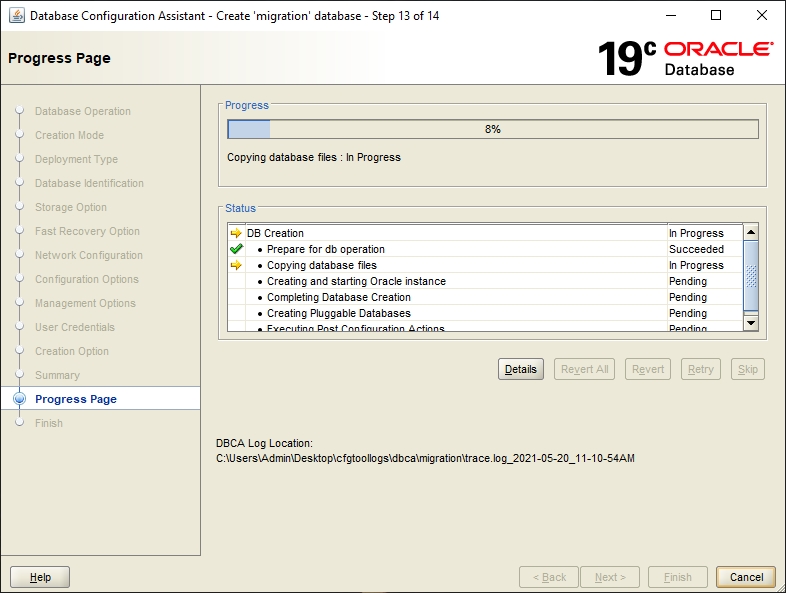

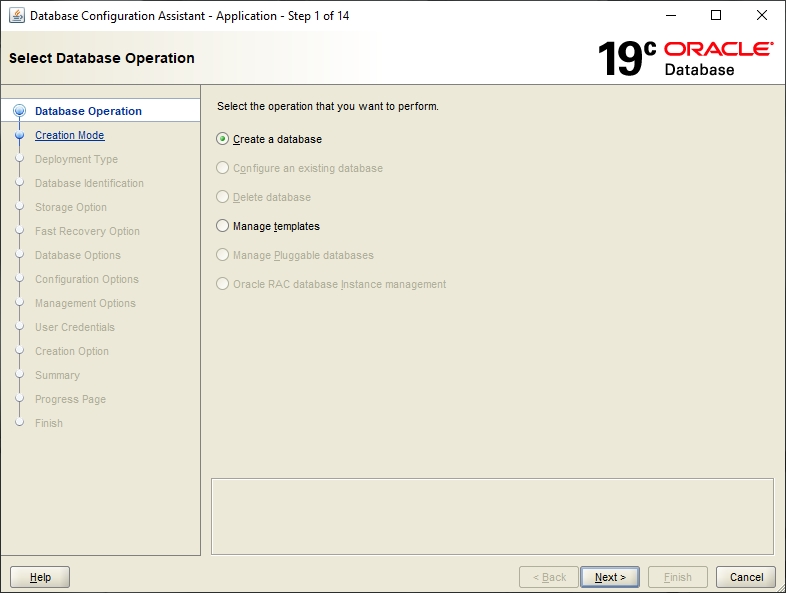

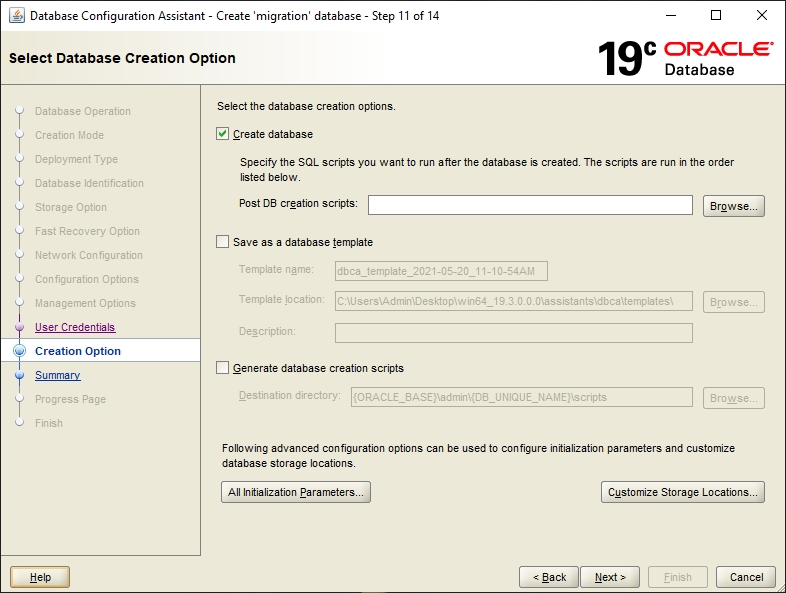

Open the Database Configuration Assistant from the Oracle program group in the Start menu. The assistant will guide you through the creation steps.

Select the Create Database option and click Next.

It is recommended to go with the Advanced configuration.

For most installations, Oracle Single Instance Database and General Purpose are sufficient.

Define the database name here. The name must be unique, meaning that there should not be another database with this name on the network. The SID is the name of a database instance and can, if desired, differ from the global database name. To make the database easy to identify, a descriptive name should be selected, for instance “migration”.

You can either create it as a Container DB with a Pluggable DB inside or not, depending on preference.

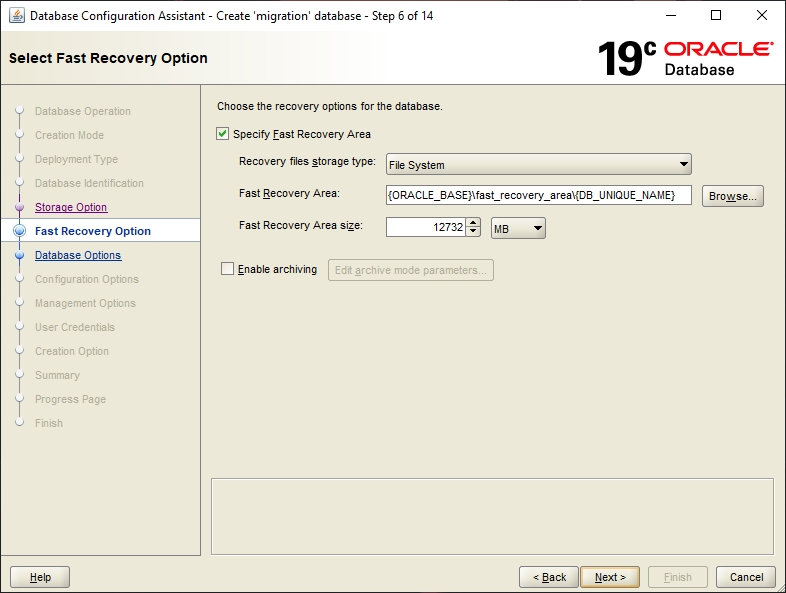

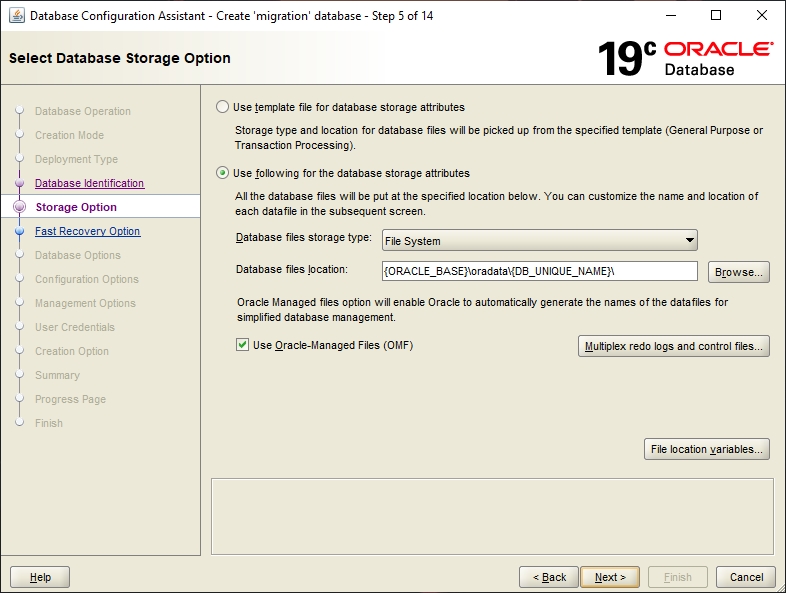

For use with migration-center the “File System” option is sufficient. For more information on the “ASM” method, please refer to the Oracle documentation.

This step describes the database's archiving and recovery modes. The default settings are fine here.

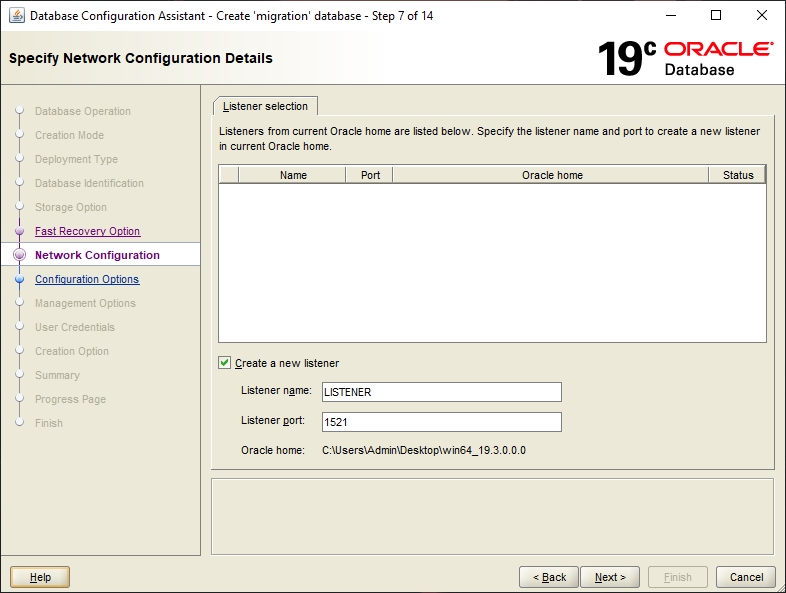

If a default listener is not already configured, you can select one here or choose to create a new one:

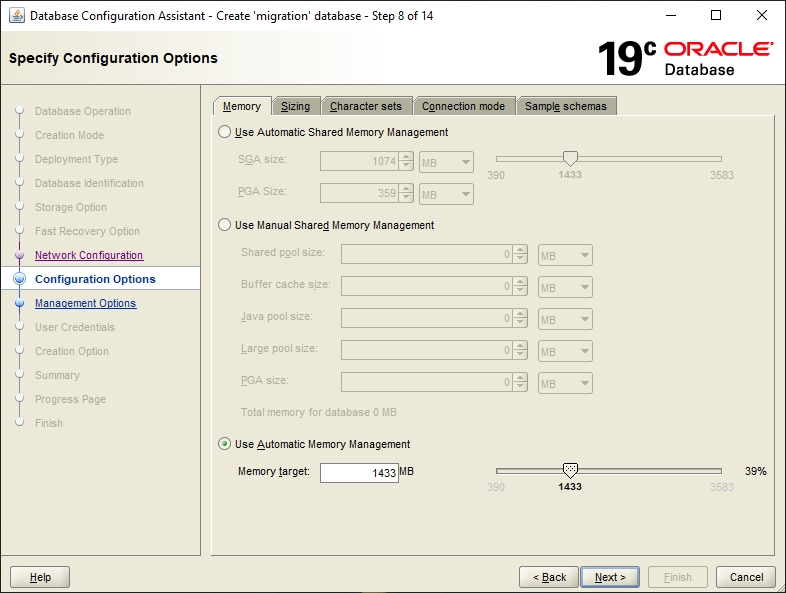

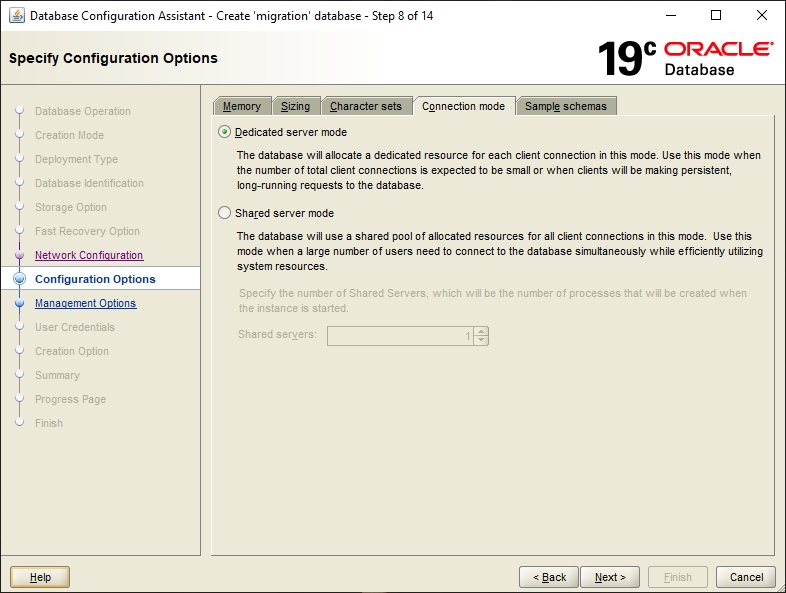

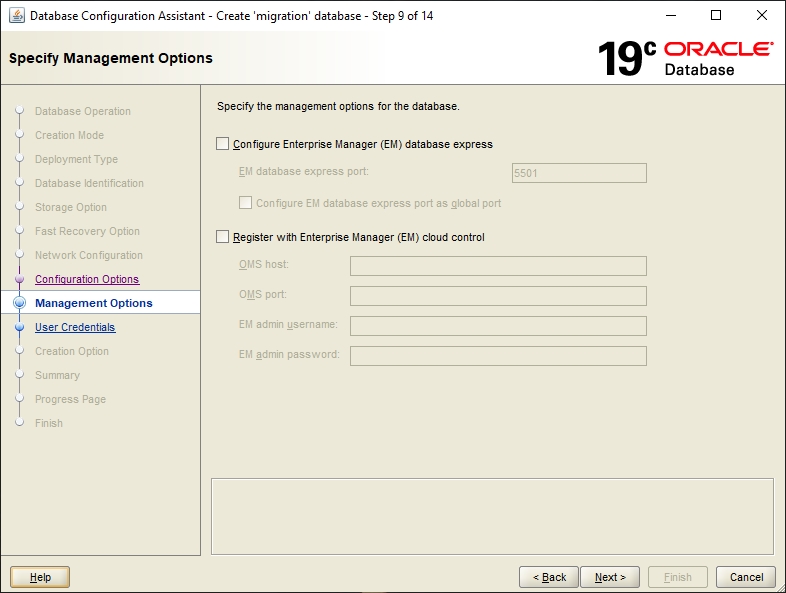

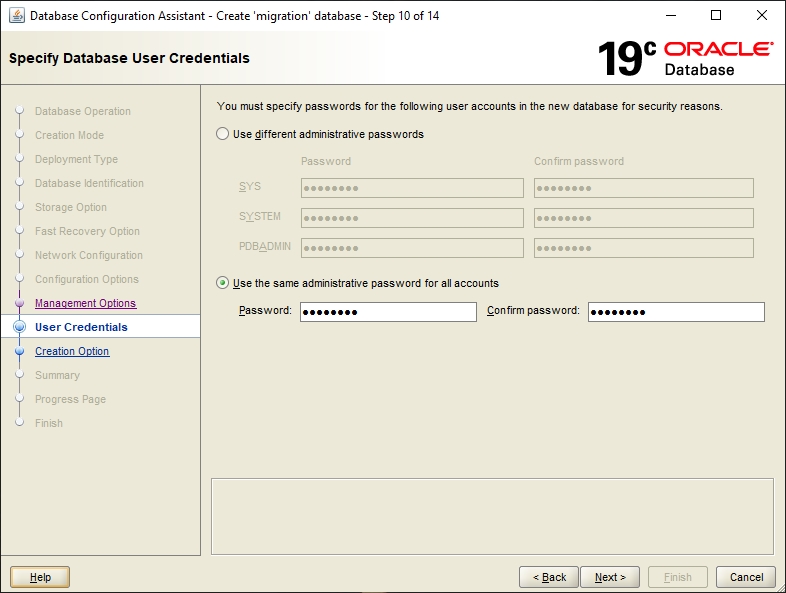

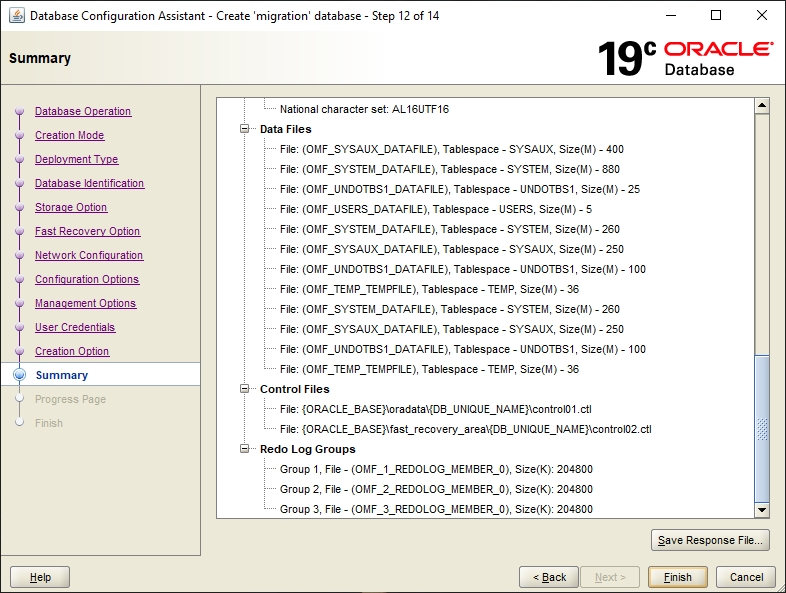

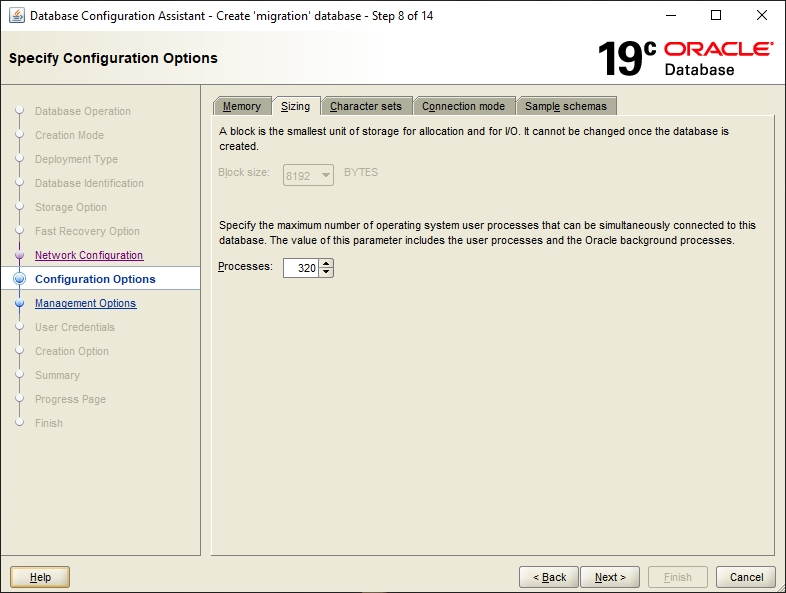

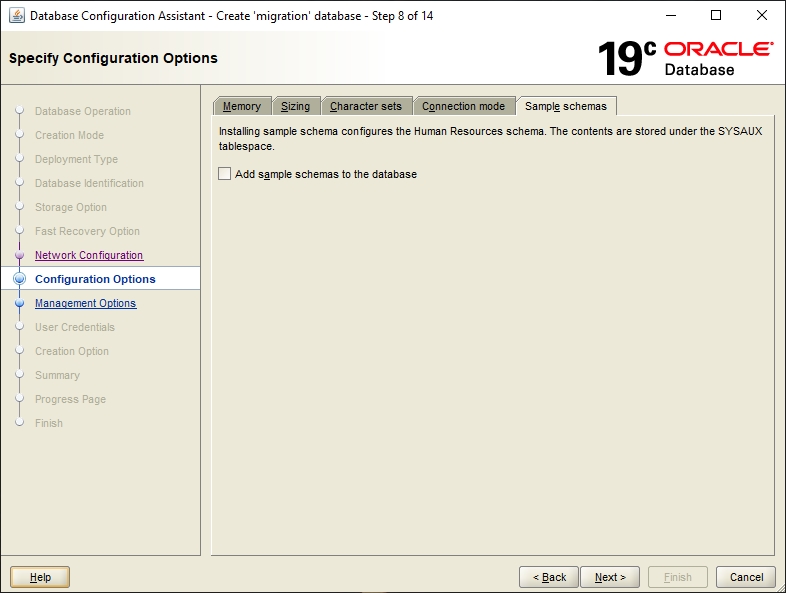

This step contains several tabs on which the configuration options for the new database must be specified.

The memory values of the database need to be specified here. For migration-center, user-defined settings are required. Please review the Database chapter in the Sizing Guide for the recommended amount of memory to allocate to the database instance.

For most projects Automatic Memory Management is a good option.